Intel and AMD have been the two primary processor companies for more than 50 years now. Although both use the x86 ISA to design their chips, over the last decade or so, their CPUs have taken completely different paths. In the mid-2000s, with the introduction of the Bulldozer chips, AMD started losing ground against Intel. A combination of low IPC and inefficient design almost drove the company into the ground. This continued for nearly a decade. The tables started turning in 2017 with the arrival of the Zen microarchitecture.

The new Ryzen processors marked a complete re-imagining of AMD’s approach to CPUs, with a focus on IPC, single-threaded performance, and, most notably, a shift to an MCM or modular chiplet design. Intel, meanwhile, continues to do things more or less exactly as they’ve done since the arrival of Sandy Bridge in 2011.

It all Started with Zen

First and second-gen Ryzen played spoiler to Intel’s midrange efforts by offering more cores and more threads than parts like the Core i5-7600K. But a combination of hardware-side issues like latency, and a lack of Ryzen-optimized games meant that Intel still commanded a significant performance lead in gaming workloads.

Things started to improve for AMD in the gaming section with the introduction of the Zen 2 based Ryzen 3000 CPUs, and Intel’s gaming crown was finally snatched with the release of the Zen 3 based Ryzen 5000 CPUs. A drastic improvement to IPC meant that AMD was able to offer more cores, but also match Intel in single-threaded workloads. Buying into Skylake refresh-refresh-refresh-refresh wouldn’t necessarily net you better framerates.

AMD and Intel have (or used to have) fundamentally different processor design philosophies. Here’s an annoying elementary school analogy that might help you understand the difference. Which one’s more fruit: A watermelon or a kilo of apples? One’s a really big fruit. And the other’s, well, a lot of small fruit. You’ll want to keep that in mind as we take a deep dive here in the next section.

Intel Monolithic Processor Design vs AMD Ryzen Chiplets

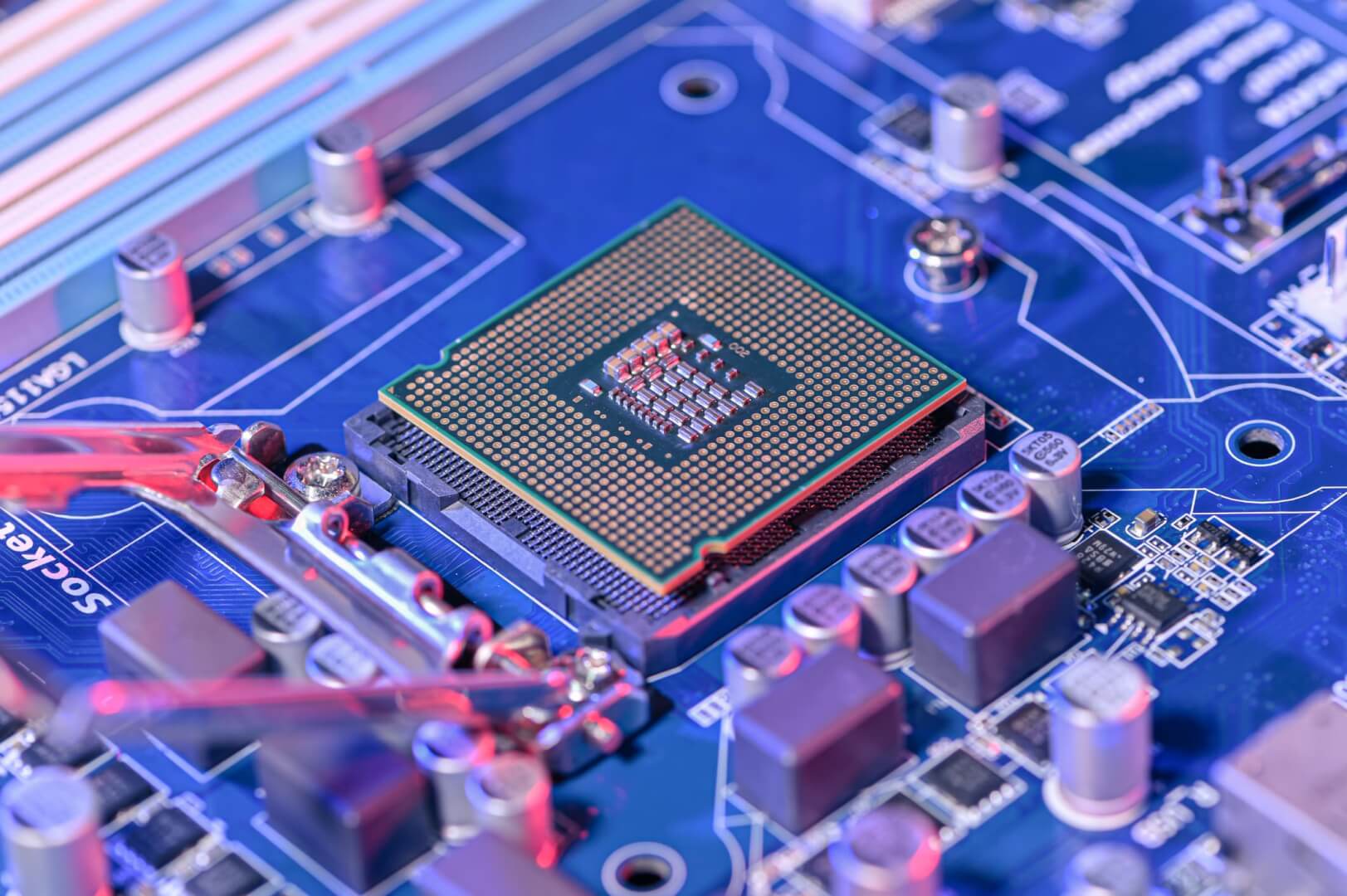

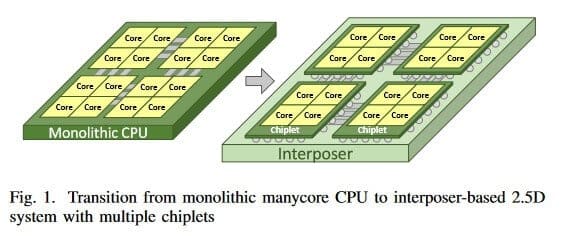

Intel follows what’s called a monolithic approach to processor design. What this means, essentially, is that all cores, cache, and I/O resources for a given processor are physically on the same monolithic chip. There are some clear advantages to this approach. The most notable is reduced latency. Since everything is on the same physical substrate, different cores take much less time to communicate, access the cache, and access system memory. Latency is reduced. This leads to optimal performance.

If everything else is the same, the monolithic approach will always net you the best performance. There’s a big drawback, though. This is in terms of cost and scaling. We need to take a quick look now at the economics of silicon yields. Strap in: things are going to get a little complicated.

Monolithic CPUs Offer Best Performance but are Expensive and…

When foundries manufacture CPUs, (or any piece of silicon for that matter) they almost never manage 100 percent yields. Yields refer to the proportion of usable parts made. If you’re on a mature process node like Intel’s 14nm+++, your silicon yields will be in excess of 70%. This means you get a lot of usable CPUs. The inverse, though, is that for every 10 CPUs you manufacture, you have to discard at least 2-3 defective units. The discarded unit obviously cost money to make, so that cost has to factor into the final selling price.

At low core counts, a monolithic approach works fine. This in large part explains why Intel’s mainstream consumer CPU line has, until recently, topped out at 4 cores. When you increase core count though, the monolithic approach results in exponentially greater costs. Why is this?

On a monolithic die, every core has to be functional. If you’re fabbing an eight-core chip and 7 out of 8 cores work, you still can’t use it. Remember what we said about yields being in excess of 70 percent? Mathematically, that ten percent defect rate stacks for every additional core on a monolithic die, to the point that with, say a 20-core Xeon, Intel actually has to throw away one or two defective chips for every usable one, since all 20 cores have to be functional. Costs don’t just scale linearly with core count–they scale exponentially because of wastage.

Furthermore, when expanding your 14nm capacity, the newly started factories won’t have the same level of processor yields as existing ones. This has already led to Intel’s processor shortages and the resulting F series CPUs.

The consequence of all this is that Intel’s process is price and performance competitive at low core counts, but just not tenable at higher core counts unless they sell at thin margins or at a loss. It’s arguably cheaper for them to manufacture dual-core and quad-core processors than it is for AMD to ship Ryzen 3 SKUs. We’ll get to why that is now.

Chips, Chiplets and Dies

AMD adopts a chiplet-based or MCM (Multi-chip Module) approach to processor design. It makes sense to think of each Ryzen CPU as multiple discrete processors stuck together with superglue–Infinity Fabric in AMD parlance. One Ryzen CCX features a 4-core/8-core processor, together with its L3 cache. Two CCXs (single 8-core CCX with Zen 3) are stuck together on a CCD to create a chiplet, the fundamental building block of Zen-based Ryzen and Epyc CPUs. Up to 8 CCDs can be stacked on a single MCM (multi-chip module), allowing for up to 64 cores in consumer Ryzen processors such as the Threadripper 3990X.

There are two big advantages to this approach. For starters, costs scale more or less linearly with core counts. Because AMD’s wastage rate is relative to its being able to create a functional 4-core block at most (a single CCX), they don’t have to throw out massive stocks of defective CPUs. The second advantage comes from their ability to leverage those defective CPUs themselves. Whereas Intel just throws them out, AMD disables functional cores on a per-CCX basis to achieve different core counts.

For example, both the Ryzen 7 5800X and 5600X feature a single CCD with eight cores. The latter has two cores disabled, giving it 6 functional cores instead of eight. Naturally, this allows it to sell six-core parts at more competitive prices than Intel.

There is a big drawback to the chiplet approach: latency. Each chiplet is on a separate physical substrate. Because of the laws of physics, this means that Ryzen CPUs incur a latency penalty for communication over the Infinity Fabric. This was most noticeable with first-gen Ryzen. Infinity Fabric speeds correlated to memory clocks and overclocking your memory, therefore, resulted in noticeably faster CPU performance.

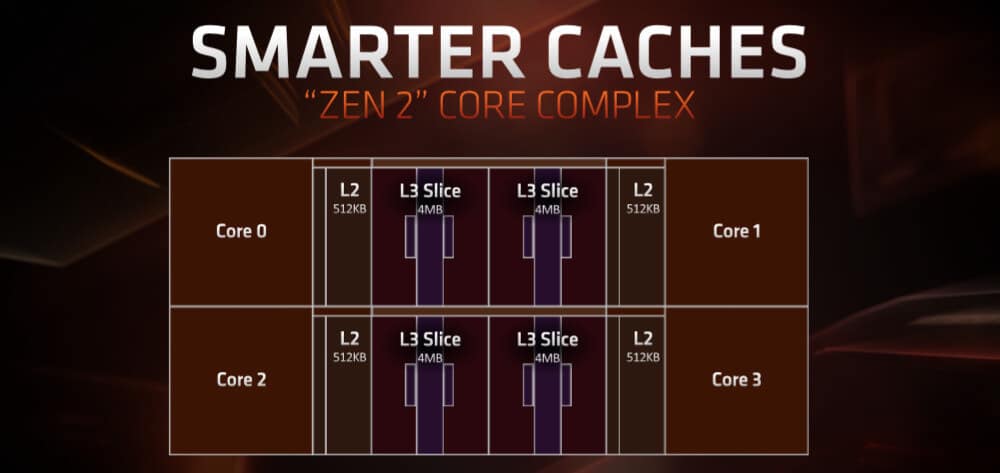

AMD managed to rectify this with the Ryzen 3000 CPUs and then further improve it with the newly launched Ryzen 5000 lineup. The former introduced a large L3 cache buffer, called “game cache”. L3 cache is the intermediary between system memory and low-level CPU core cache (L1 and L2). Typically, consumer processors have a small amount of L3–Intel’s i7 9700K, for instance, has just 12 MB of L3. AMD, however, paired the 3700X with 32 MB of L3 and the 3900X with a whopping 64 MB of L3.

The L3 cache is spread evenly between different cores. The increased amount of cache means that, with a bit of intelligent scheduling, cores can cache more of what they need. The buffer eliminates most of the latency penalty incurred over Infinity Fabric.

The Ryzen 5000 CPUs went a step ahead and eliminated the four core CCXs in favor of eight core complexes, with each core directly connected to every other on the CCX/CCD. This improves the inter-core latency, cache latency, and bandwidth as well as provides each core with twice as much L3 cache, significantly improving gaming performance:

Chiplet or Monolithic: Which is Better?

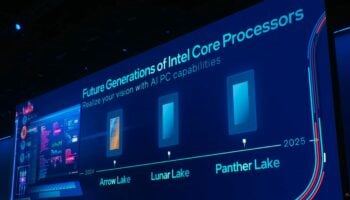

The chiplet approach going to see widespread adoption in the coming years, from both AMD as well as Intel, for CPUs as well as GPUs. This is because Moore’s law–which mandated a doubling in processing power, mainly as a result of die shrinks (56nm to 28nm> 28nm to 14nm>14nm to 7nm) every couple of years–has comprehensively slowed down. Intel has been stuck on the 14nm node for more than half a decade and even now, after 6-7 years, the succeeding 10nm node isn’t twice as faster (and denser) than its preceding 14nm process node.

The ironic thing is that Intel has already adopted the chiplet design for most of its future processors. The Ponte Vecchio Data Center GPU, Xe HP GPUs, and Meteor Lake are all going to leverage chiplets, with the only difference being with respect to the nomenclature. Intel calls them tiles: