Every modern processor features a small amount of cache memory. Over the past few decades, cache architectures have become increasingly complex: the number of CPU cache levels has grown to three (L1, L2, and L3), cache sizes have grown, and cache associativity has undergone several changes as well. But before we dive into the specifics, what exactly is cache memory, and why is it important? And what’s the difference between the L1, L2, and L3 caches found in modern processors?

Cache Memory vs System Memory: SRAM vs DRAM

Cache memory is based on the much faster (and more expensive) Static RAM (SRAM), while system memory uses the slower Dynamic RAM (DRAM). The main difference between the two is that an SRAM cell is built entirely from CMOS transistors (typically six per bit), while a DRAM cell stores each bit as charge in a capacitor paired with a single access transistor.

DRAM needs to be refreshed constantly (because the stored charge leaks away) to retain its data. Due to this, it draws more power and is slower as well. SRAM doesn’t have to be refreshed, which makes it faster and more efficient. However, its higher cost (and lower density, since each bit takes six transistors) has prevented mainstream adoption as system memory, limiting its use largely to processor caches.

Why Is Cache Memory Important for Processors?

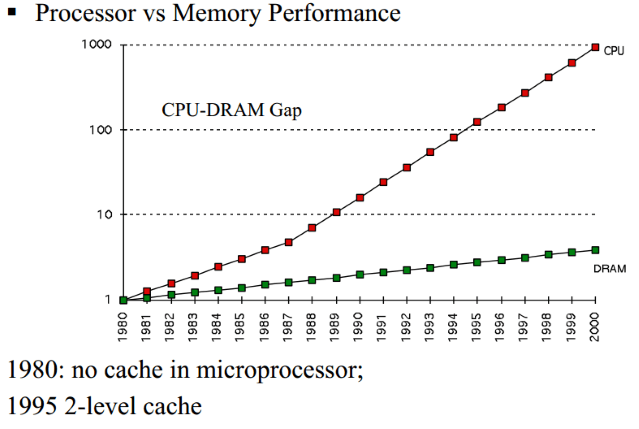

Modern processors are light years ahead of their primitive ancestors from the 80s and early 90s. These days, top-end consumer chips run at well over 4GHz, while most DDR4 memory modules are clocked below 1800MHz. As a result, system memory is far too slow for the CPU to work with directly without severely stalling it. This is where cache memory comes in. It acts as an intermediary between the two, storing small chunks of frequently used data close to the cores, each tagged with the memory address it came from.
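One standard way to put a number on this is the average memory access time (AMAT): the hit time plus the miss rate multiplied by the miss penalty. The sketch below is a minimal illustration; the 4-cycle hit, 5% miss rate, and 300-cycle DRAM penalty are hypothetical figures chosen for round numbers, not measurements of any particular CPU.

```python
def amat(hit_time: float, miss_rate: float, miss_penalty: float) -> float:
    """Average memory access time = hit time + miss rate x miss penalty (cycles)."""
    return hit_time + miss_rate * miss_penalty

# Hypothetical figures for illustration only, not measurements of a real CPU.
DRAM_LATENCY = 300    # cycles for a trip to main memory
CACHE_HIT = 4         # cycles for a cache hit

no_cache = DRAM_LATENCY                                   # every access goes to DRAM
with_cache = amat(CACHE_HIT, miss_rate=0.05, miss_penalty=DRAM_LATENCY)
print(f"without cache: {no_cache} cycles, with cache: {with_cache:.1f} cycles")
```

With a 95% hit rate under these assumed numbers, the average access drops from 300 cycles to about 19, which is why even a small cache makes such a difference.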

L1, L2 and L3 Cache: What’s the Difference?

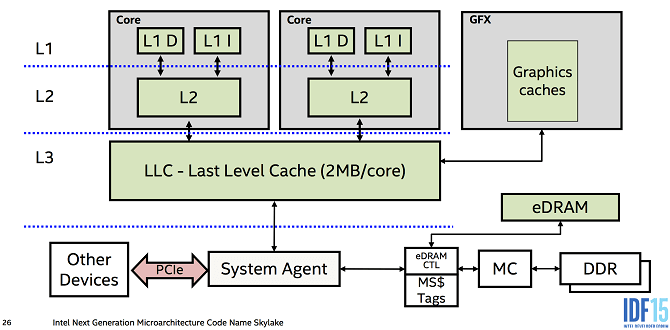

In contemporary processors, cache memory is divided into three levels: L1, L2, and L3, in order of increasing size and decreasing speed. L3 is the largest and also the slowest cache level (3rd Gen Ryzen CPUs feature an L3 cache of up to 64MB). L1 and L2 are much smaller and faster than L3 and are private to each core. Older processors didn’t include an L3 cache at all, so system memory interacted directly with the L2 cache.

L1 cache is further divided into two sections: the L1 Data Cache and the L1 Instruction Cache. The latter holds the instructions that the CPU core will execute, while the former holds the data the core reads and writes; modified data is eventually written back to main memory.

Beyond storing instructions, the L1 instruction cache also holds pre-decode data and branching information. Furthermore, while the L1 data cache often acts as an output cache, the L1 instruction cache behaves like an input cache. This helps when executing loops, as the required instructions sit right next to the fetch unit.

Flagship consumer CPUs include up to 512KB of L1 cache in total (64KB per core across eight cores), while server parts, with their higher core counts, feature considerably more.

L2 cache is much larger than L1 but also slower. Flagship CPUs carry 4 to 8MB of L2 in total (512KB per core). Each core has its own L1 and L2 cache, while the last level, the L3 cache, is shared across all the cores on a die.

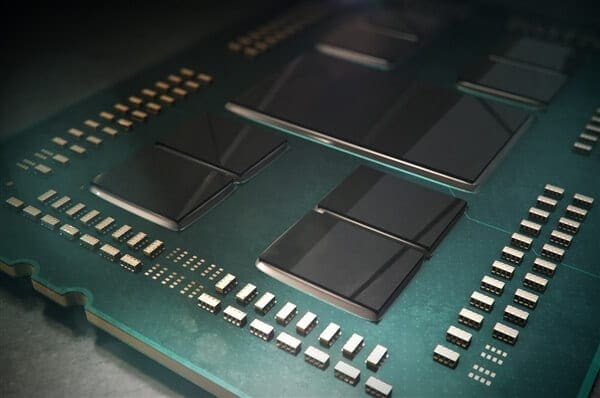

L3 cache is the last and lowest level of the cache hierarchy. On consumer chips, it varies from 10MB to 64MB, while server chips feature as much as 256MB. AMD’s Ryzen CPUs also have much more L3 cache than rival Intel chips. This is because of AMD’s multi-chip module (MCM) design, compared to the monolithic dies on the Intel side.

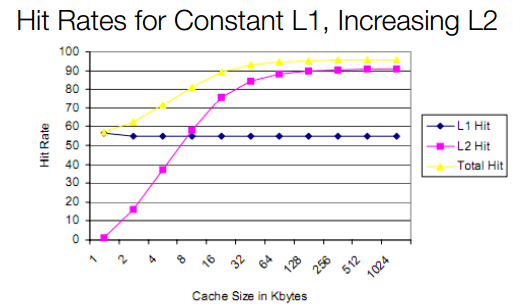

When the CPU needs data, it first searches the associated core’s L1 cache. If the data isn’t there, the L2 and L3 caches are searched next. If the necessary data is found in any of them, it’s called a cache hit. If the data isn’t present in any cache level, it’s called a cache miss: the CPU has to request it from main memory (or, in the worst case, storage) and load it into the cache, which takes much longer and hurts performance.
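The search order described above can be sketched as a simple walk down the hierarchy. This is a simplified model, not how any specific CPU is wired: the latencies in `LATENCY` are hypothetical, each level is just a set of addresses, levels are checked one after another (real designs may overlap these lookups), and `lookup` only reports where the data was found without filling any cache.

```python
from typing import List, Set, Tuple

# Hypothetical lookup latencies in core cycles, for illustration only.
LATENCY = {"L1": 4, "L2": 12, "L3": 40, "DRAM": 200}

def lookup(address: int, levels: List[Tuple[str, Set[int]]]) -> Tuple[str, int]:
    """Walk the hierarchy in order; return (where the data was found, total cycles)."""
    cycles = 0
    for name, contents in levels:
        cycles += LATENCY[name]          # pay the cost of checking this level
        if address in contents:
            return name, cycles          # cache hit at this level
    cycles += LATENCY["DRAM"]            # cache miss at every level: go to DRAM
    return "DRAM", cycles

hierarchy = [
    ("L1", {0x100}),
    ("L2", {0x100, 0x200}),
    ("L3", {0x100, 0x200, 0x300}),
]
for addr in (0x100, 0x200, 0x300, 0x400):
    print(hex(addr), lookup(addr, hierarchy))
```

Each level that misses adds its latency to the total, so an address that has to come all the way from DRAM pays for every failed lookup on the way down.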

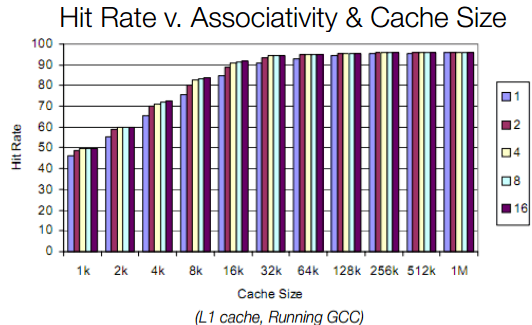

Generally, the cache hit rate improves as the cache size increases. This is especially true for gaming and other latency-sensitive workloads.
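To see the trend, the sketch below replays one synthetic access trace through a fully associative LRU cache of increasing capacity. The trace (80% of accesses to a small "hot" set of 64 lines, the rest spread over 10,000 lines) is an assumption for illustration, and `lru_hit_rate` is a hypothetical helper, not a model of any real CPU’s replacement policy.

```python
import random
from collections import OrderedDict

def lru_hit_rate(trace: list, capacity: int) -> float:
    """Replay a trace of line addresses through a fully associative LRU cache."""
    cache: OrderedDict = OrderedDict()        # least recently used line first
    hits = 0
    for line in trace:
        if line in cache:
            hits += 1
            cache.move_to_end(line)           # mark as most recently used
        else:
            if len(cache) >= capacity:
                cache.popitem(last=False)     # evict the least recently used line
            cache[line] = None
    return hits / len(trace)

# Hypothetical synthetic trace: 80% of accesses go to a "hot" set of 64 lines,
# the remaining 20% are scattered over 10,000 lines.
random.seed(1)
trace = [random.randrange(64) if random.random() < 0.8 else random.randrange(10_000)
         for _ in range(50_000)]

for capacity in (16, 32, 64, 128, 256):
    print(f"{capacity:>4} lines: hit rate {lru_hit_rate(trace, capacity):.3f}")
```

Because an LRU cache of a given capacity always holds a subset of what a larger LRU cache would hold for the same trace, the hit rate here can only stay flat or rise with capacity. Once the hot set fits, further growth only helps the scattered accesses, so the gains flatten out.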

Inclusive vs Exclusive Cache

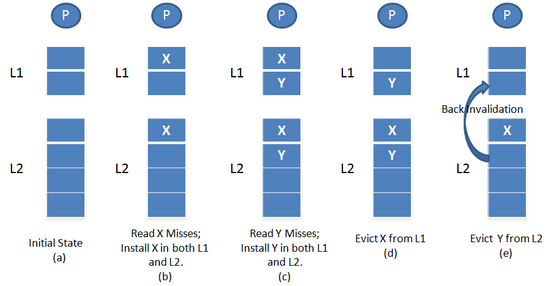

There are two main cache inclusion policies: inclusive and exclusive. If all the data blocks present in the higher-level cache (L1) are also present in the lower-level cache (L2), then the lower-level cache is said to be inclusive of the higher-level cache.

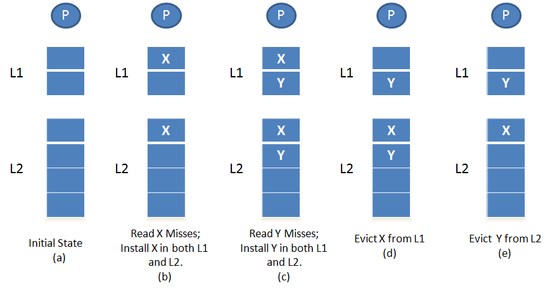

On the other hand, if the lower-level cache only contains data blocks that aren’t present in the higher-level cache, then the cache is said to be exclusive of the higher-level cache.
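The two definitions can be written as set relations: inclusive means every L1 block is also in L2, exclusive means the two levels share no blocks. The `classify` helper below is a hypothetical illustration that checks a single snapshot of the contents; an actual inclusion policy is a guarantee the hardware maintains on every access, and a snapshot of a cache that follows neither policy can happen to pass either test.

```python
def classify(l1: set, l2: set) -> str:
    """Describe how the L2 contents relate to the L1 contents at one instant."""
    if l1 <= l2:
        return "inclusive"     # every L1 block also lives in L2
    if l1.isdisjoint(l2):
        return "exclusive"     # no block lives in both levels
    return "neither"           # partial overlap: neither inclusive nor exclusive

print(classify({"X", "Y"}, {"X", "Y", "Z"}))   # inclusive
print(classify({"X", "Y"}, {"Z"}))             # exclusive
print(classify({"X", "Y"}, {"Y", "Z"}))        # neither
```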

Consider a CPU with two levels of inclusive cache memory. Now, suppose block X is requested. If the block is found in the L1 cache, the data is read from L1 and consumed by the CPU core. However, if the block is not found in L1 but is present in L2, it’s fetched from L2 and placed in L1.

If L1 is full, a block is evicted from L1 to make room for the new block, while the L2 cache remains unchanged. However, if the data block is found in neither L1 nor L2, it’s fetched from memory and placed in both cache levels. In this case, if the L2 cache is full and a block is evicted to make room for the new data, the L2 cache sends an invalidation request to the L1 cache so that the evicted block is removed from there as well. Due to this back-invalidation procedure, an inclusive cache is slightly slower than a non-inclusive or exclusive cache.
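The inclusive behavior described above, including the back-invalidation, can be sketched as follows. This is a simplified model under stated assumptions: both levels are small fully associative caches with LRU replacement, and hits in L1 are not reported to L2, so L2’s LRU order only changes on L2 hits and fills. `InclusiveHierarchy` and its methods are hypothetical names.

```python
from collections import OrderedDict

class InclusiveHierarchy:
    """Two fully associative LRU levels; L2 is kept inclusive of L1 (a sketch)."""

    def __init__(self, l1_size: int, l2_size: int):
        self.l1: OrderedDict = OrderedDict()   # least recently used block first
        self.l2: OrderedDict = OrderedDict()
        self.l1_size, self.l2_size = l1_size, l2_size
        self.back_invalidations = 0

    def _fill_l1(self, block) -> None:
        if len(self.l1) >= self.l1_size:
            self.l1.popitem(last=False)        # evict from L1 only; L2 is unchanged
        self.l1[block] = None

    def access(self, block) -> str:
        if block in self.l1:                   # L1 hit (L2 is not told about it)
            self.l1.move_to_end(block)
            return "L1"
        if block in self.l2:                   # L2 hit: copy the block into L1
            self.l2.move_to_end(block)
            self._fill_l1(block)
            return "L2"
        # Miss in both levels: fetch from memory into L2 and L1.
        if len(self.l2) >= self.l2_size:
            victim, _ = self.l2.popitem(last=False)
            if victim in self.l1:              # back-invalidation keeps L1 a subset of L2
                del self.l1[victim]
                self.back_invalidations += 1
        self.l2[block] = None
        self._fill_l1(block)
        return "memory"


caches = InclusiveHierarchy(l1_size=2, l2_size=2)
print([caches.access(b) for b in "ABAC"])    # ['memory', 'memory', 'L1', 'memory']
print(sorted(caches.l1), sorted(caches.l2))  # ['B', 'C'] ['B', 'C']
```

In this run, block A was just used from L1, but L2 never saw that hit and evicted A as its least recently used block; the back-invalidation then removed A from L1 too. This is one of the costs of keeping the hierarchy inclusive.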

Now, let’s consider the same example with an exclusive cache. Suppose the CPU core sends a request for block X. If block X is found in L1, it’s read and consumed by the core from there. However, if block X is not found in L1 but is present in L2, it’s moved (not copied) from L2 to L1. If there’s no room in L1, one block is evicted from L1 and stored in L2. This is the only way the L2 cache is populated, so it acts as a victim cache. If block X isn’t found in L1 or L2, it’s fetched from memory and placed in L1 only.
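The exclusive policy can be sketched under simplifying assumptions (small fully associative LRU levels; `ExclusiveHierarchy` and its methods are hypothetical names). L2 only ever receives blocks evicted from L1, and an L2 hit moves the block up rather than copying it, so the two levels never hold the same block and their capacities effectively add up. Blocks pushed out of L2 are simply dropped here; a real cache would first write back any modified data.

```python
from collections import OrderedDict

class ExclusiveHierarchy:
    """Two fully associative LRU levels; L2 acts as a victim cache (a sketch)."""

    def __init__(self, l1_size: int, l2_size: int):
        self.l1: OrderedDict = OrderedDict()   # least recently used block first
        self.l2: OrderedDict = OrderedDict()
        self.l1_size, self.l2_size = l1_size, l2_size

    def _fill_l1(self, block) -> None:
        if len(self.l1) >= self.l1_size:
            victim, _ = self.l1.popitem(last=False)   # L1 victim moves down to L2
            if len(self.l2) >= self.l2_size:
                self.l2.popitem(last=False)           # oldest L2 block is dropped
            self.l2[victim] = None
        self.l1[block] = None

    def access(self, block) -> str:
        if block in self.l1:                   # L1 hit
            self.l1.move_to_end(block)
            return "L1"
        if block in self.l2:                   # L2 hit: move (not copy) into L1
            del self.l2[block]
            self._fill_l1(block)
            return "L2"
        self._fill_l1(block)                   # miss: memory fills L1 only
        return "memory"


caches = ExclusiveHierarchy(l1_size=2, l2_size=2)
print([caches.access(b) for b in "ABCAD"])   # ['memory', 'memory', 'memory', 'L2', 'memory']
print(sorted(caches.l1), sorted(caches.l2))  # ['A', 'D'] ['B', 'C']
```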


There’s a third, less commonly used cache policy called non-inclusive non-exclusive (NINE). Here, the lower-level cache is neither inclusive nor exclusive of the higher-level cache. Let’s consider the same example one last time. There’s a request for block X, and it’s found in L1, so the CPU core reads and consumes the block from the L1 cache. If the block isn’t found in L1 but is present in L2, it’s copied from L2 into L1, and the L2 cache remains unchanged, just as with an inclusive cache.

If the block isn’t found in either cache level, it’s fetched from main memory and placed in both L1 and L2. However, if this results in the eviction of a block from L2, there’s no back-invalidation to remove the same block from L1, unlike with an inclusive cache.
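A NINE hierarchy can be sketched under simplifying assumptions (small fully associative LRU levels; `NineHierarchy` and its methods are hypothetical names). Fills from memory go to both levels and L2 hits are copied into L1, but an eviction from L2 never touches L1, so a block can end up living in L1 alone.

```python
from collections import OrderedDict

class NineHierarchy:
    """Non-inclusive non-exclusive two-level cache (a sketch)."""

    def __init__(self, l1_size: int, l2_size: int):
        self.l1: OrderedDict = OrderedDict()   # least recently used block first
        self.l2: OrderedDict = OrderedDict()
        self.l1_size, self.l2_size = l1_size, l2_size

    @staticmethod
    def _fill(cache: OrderedDict, size: int, block) -> None:
        if len(cache) >= size:
            cache.popitem(last=False)          # evict this level's LRU block only
        cache[block] = None

    def access(self, block) -> str:
        if block in self.l1:                   # L1 hit
            self.l1.move_to_end(block)
            return "L1"
        if block in self.l2:                   # L2 hit: copy into L1, L2 keeps its copy
            self.l2.move_to_end(block)
            self._fill(self.l1, self.l1_size, block)
            return "L2"
        # Miss: fill both levels; an L2 eviction is NOT back-invalidated in L1.
        self._fill(self.l2, self.l2_size, block)
        self._fill(self.l1, self.l1_size, block)
        return "memory"


caches = NineHierarchy(l1_size=2, l2_size=2)
print([caches.access(b) for b in "ABAC"])    # ['memory', 'memory', 'L1', 'memory']
print(sorted(caches.l1), sorted(caches.l2))  # ['A', 'C'] ['B', 'C']
```

After this run, block A is still in L1 even though L2 evicted it, so the hierarchy is not inclusive; block C sits in both levels, so it is not exclusive either.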

A Look at Memory Mapping

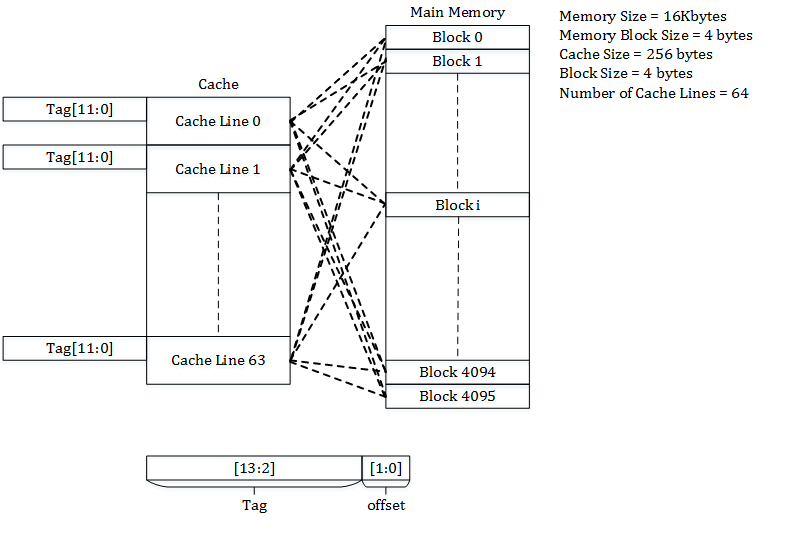

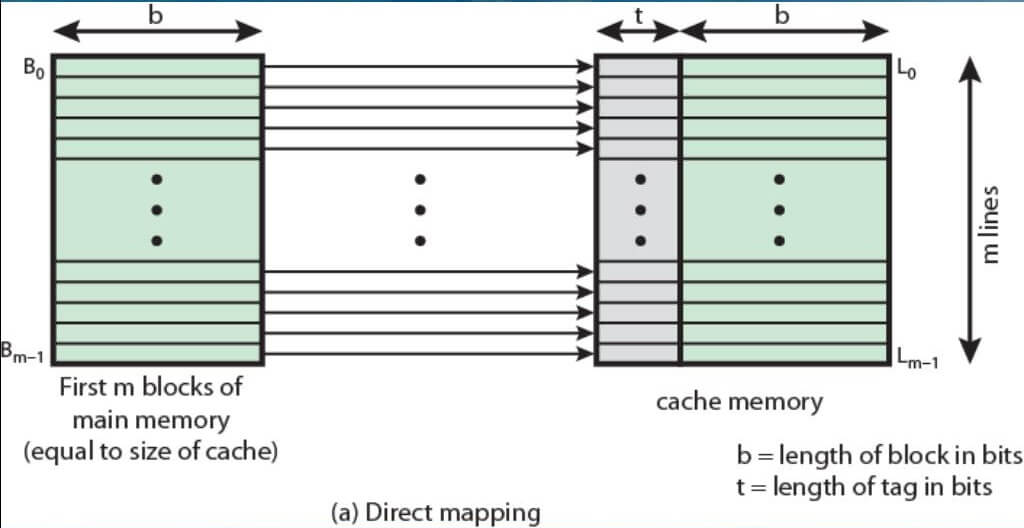

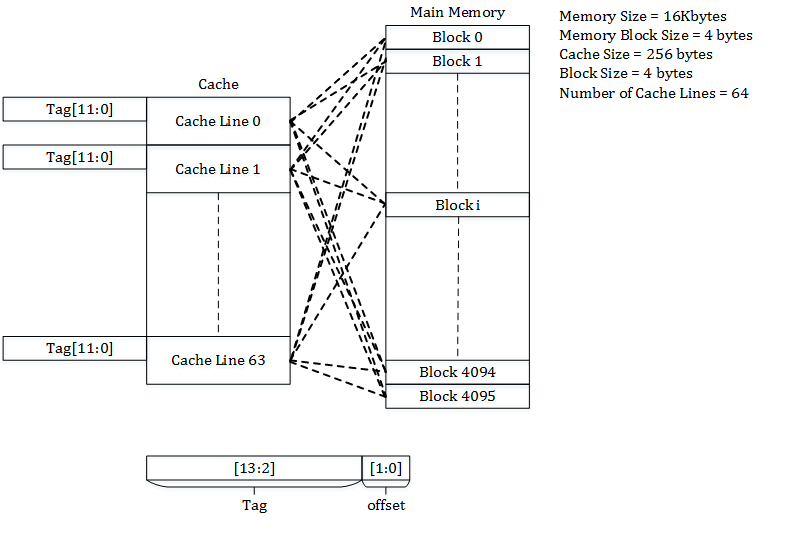

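With the basics of cache out of the way, let’s talk about how system memory maps onto cache memory. This is called cache or memory mapping. The cache memory is divided into sets, and each set is in turn divided into n lines of 64 bytes each. The system memory is divided into the same number of blocks as the cache has sets, and each memory block is linked to one set. (In practice, a memory block is not one contiguous chunk of RAM: consecutive 64-byte lines of memory map to consecutive sets and wrap around, so each set serves every Nth line of memory, where N is the number of sets.)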

Take a 512KB cache with 64-byte lines: it is divided into 8,192 lines (512KB / 64 bytes), which are then grouped into sets. This is called an n-way set-associative cache. With a 2-way set-associative cache, each set contains two lines; a 4-way cache has four lines per set, an 8-way cache eight, and a 16-way cache sixteen. With 1GB of system RAM and a 4-way cache, each memory block will be 512KB in size.

With 512KB of 4-way set-associative cache, 1GB of RAM is divided into 2,048 blocks (8,192 lines / 4 ways), linked to the same number of 4-line cache sets.

In the same way, a 16-way set-associative cache is divided into 512 sets of 16 lines each, linked to 512 memory blocks of 2,048KB (2MB) each. When a set runs out of free lines, the cache controller evicts one of its existing lines and loads the required data in its place so that the processor can continue execution.
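The set arithmetic above can be checked with a few lines of code. This sketch assumes the same example figures (a 512KB cache, 64-byte lines, 1GB of RAM); `geometry` is a hypothetical helper, and the 1-way row corresponds to a direct-mapped cache.

```python
CACHE_BYTES = 512 * 1024   # 512KB cache (example figure from the text)
LINE_BYTES = 64            # 64-byte cache lines
RAM_BYTES = 1024 ** 3      # 1GB of system memory

def geometry(ways: int) -> tuple:
    """Return (number of sets, bytes of RAM linked to each set) for an n-way cache."""
    lines = CACHE_BYTES // LINE_BYTES          # 8,192 lines in total
    sets = lines // ways                       # lines are grouped into sets of `ways`
    return sets, RAM_BYTES // sets             # each set serves an equal share of RAM

for ways in (1, 2, 4, 8, 16):
    sets, per_set = geometry(ways)
    print(f"{ways:>2}-way: {sets:>5} sets, {per_set // 1024:>5}KB of RAM per set")
```

The rows reproduce the figures in the text: 2,048 sets with 512KB of RAM each for 4-way, 512 sets with 2,048KB each for 16-way, and 8,192 single-line sets with 128KB each for direct mapping.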

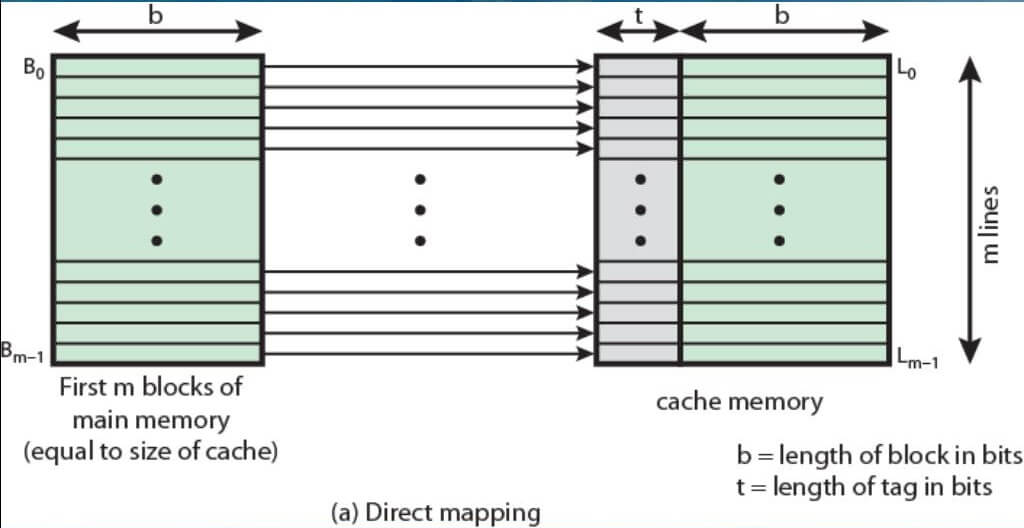

N-way set-associative cache is the most commonly used mapping method. There are two more methods, known as direct mapping and fully associative mapping. In the former, each block of memory is hard-linked to a single cache line, while in the latter, the cache can hold data from any memory address: each line can hold any main memory block. This method has the highest hit rate. However, it’s costly to implement, and as a result, chipmakers mostly avoid it for large caches.

Which Mapping is the Best?

Direct mapping is the easiest configuration to implement, but at the same time it’s the least efficient. For example, if the CPU asks for a given memory address (1,000 in this case), the controller will load the 64-byte line containing it from memory and store it in the cache (addresses 960 to 1,023, since lines are aligned to 64-byte boundaries). In the future, if the CPU requires data from the same address or from neighboring addresses in that line, it will already be in the cache.

This becomes a problem when the CPU needs two addresses, one after the other, that belong to the same memory block and therefore map to the same cache line. With a 512KB direct-mapped cache and 1GB of RAM, each memory block is 128KB, made up of 64-byte lines spaced exactly 512KB apart. For example, if the CPU first asks for address 1,000 and then for address 525,288 (1,000 + 512KB), a cache miss will occur, because both addresses map to the same cache line, which currently holds addresses 960 to 1,023. So the cache controller will load the line from 525,248 to 525,311 into that cache line, evicting the older data. If the CPU keeps alternating between the two addresses, every access misses. That is why direct mapping is the least efficient cache mapping technique and has largely been abandoned.
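A direct-mapped controller doesn’t search for a line: it splits the address into an offset within the 64-byte line, an index that selects the one cache line the address may use, and a tag that records which part of memory currently occupies that line. The sketch below assumes a 512KB cache with 64-byte lines (8,192 lines); `split_address` and `DirectMappedCache` are hypothetical names. Two addresses collide when their line numbers differ by a multiple of 8,192, that is, when they are a multiple of 512KB apart, as 1,000 and 525,288 are.

```python
LINE_BYTES = 64
NUM_LINES = 8192            # 512KB / 64B; direct-mapped means one line per set

def split_address(addr: int) -> tuple:
    """Split a byte address into (tag, index, offset) for a direct-mapped cache."""
    offset = addr % LINE_BYTES            # byte position inside the 64-byte line
    line_number = addr // LINE_BYTES      # which 64-byte chunk of memory this is
    index = line_number % NUM_LINES       # the only cache line this chunk may use
    tag = line_number // NUM_LINES        # tells chunks sharing that line apart
    return tag, index, offset

class DirectMappedCache:
    def __init__(self) -> None:
        self.tags = [None] * NUM_LINES    # tag currently stored in each line

    def access(self, addr: int) -> bool:
        """Return True on a hit; on a miss, replace whatever the line held."""
        tag, index, _ = split_address(addr)
        hit = self.tags[index] == tag
        self.tags[index] = tag
        return hit

print(split_address(1000))      # (0, 15, 40): line 15 holds bytes 960 to 1,023
print(split_address(525_288))   # (1, 15, 40): same line, different tag

cache = DirectMappedCache()
print([cache.access(a) for a in [1000, 525_288] * 4])   # every access misses
```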

Fully associative mapping is somewhat the opposite of direct mapping. There is no hard linking between the lines of the memory cache and RAM locations: the cache controller can store any address in any line. Therefore, the above problem doesn’t happen. This cache mapping technique is the most efficient, with the highest hit rate. However, as already explained, it’s the hardest and most expensive to implement.

As a result, set-associative mapping, a hybrid between fully associative and direct mapping, is used. Here, every block of memory is linked to a set of lines (the number depends on the associativity), and each line in the set can hold data from any address in the mapped memory block. In a 4-way set-associative cache, each set can hold up to four lines from the same memory block. With a 16-way configuration, that figure grows to 16.
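The same idea extends to set-associative caches: the index now selects a set rather than a single line, and the tag can sit in any of that set’s ways. The sketch below is a minimal model that assumes LRU replacement within each set (real CPUs often use cheaper approximations of LRU); the class name and its default sizes are hypothetical. With `ways=1` it behaves as a direct-mapped cache, and with `ways` equal to the total number of lines it becomes fully associative.

```python
from collections import OrderedDict

class SetAssociativeCache:
    """N-way set-associative cache with LRU replacement inside each set (a sketch)."""

    def __init__(self, cache_bytes: int = 512 * 1024, line_bytes: int = 64, ways: int = 4):
        self.line_bytes = line_bytes
        self.ways = ways
        self.num_sets = cache_bytes // (line_bytes * ways)
        # One ordered mapping per set: tag -> None, least recently used first.
        self.sets = [OrderedDict() for _ in range(self.num_sets)]

    def access(self, addr: int) -> bool:
        """Return True on a hit; on a miss, fill the line (evicting LRU if needed)."""
        line_number = addr // self.line_bytes
        index = line_number % self.num_sets        # which set this line maps to
        tag = line_number // self.num_sets
        entries = self.sets[index]
        if tag in entries:
            entries.move_to_end(tag)               # hit: mark most recently used
            return True
        if len(entries) >= self.ways:
            entries.popitem(last=False)            # set is full: evict its LRU line
        entries[tag] = None
        return False


pattern = [1000, 525_288] * 4                      # two addresses 512KB apart
for ways in (1, 4):
    cache = SetAssociativeCache(ways=ways)
    print(f"{ways}-way:", [cache.access(a) for a in pattern])
# 1-way: every access misses; 4-way: only the first two accesses miss
```

Both addresses still land in the same set, but with four ways they can be cached side by side, so the ping-pong eviction seen with direct mapping disappears.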

When all the slots in a mapped set are used up, the controller evicts the contents of one of the slots and loads the new line from the same mapped memory block in its place. If you increase the number of ways of a set-associative cache, for example from 4-way to 8-way, you have more slots available per set. However, if you don’t increase the total amount of cache, the number of sets drops, so each set is linked to a larger memory block. The extra ways usually offset this and reduce conflict evictions, but with diminishing returns, and every lookup has to compare more tags, which costs latency and power.

On the other hand, increasing the cache size along with the set size gives you more lines in each set, which means more cache lines available to every memory block. Generally, this increases the hit rate, but there’s a limit to how much it can improve the overall figure.
