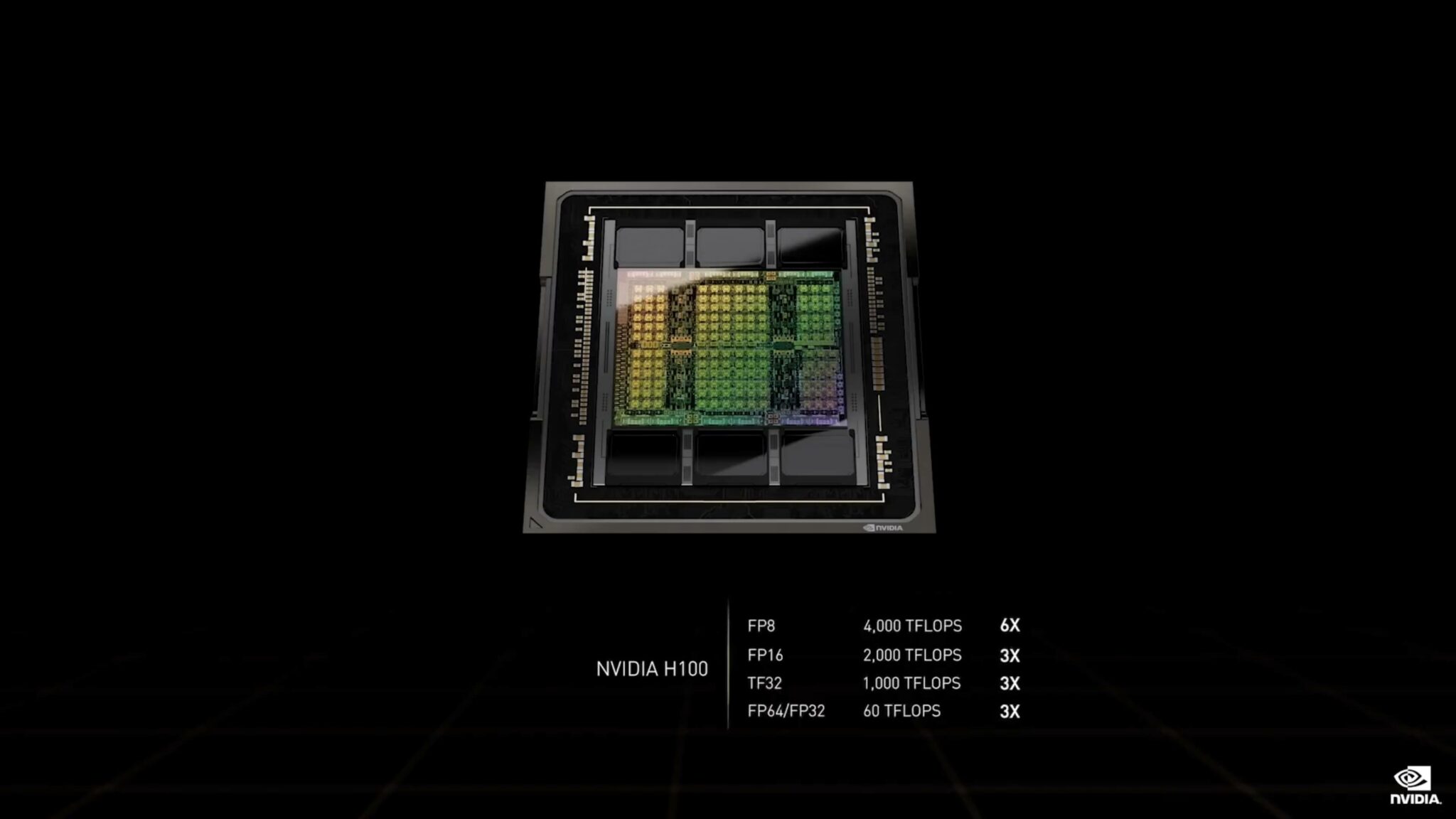

NVIDIA announced its much-anticipated Hopper data center GPU architecture today. Retaining the building blocks of the GA100 “Ampere” die, the GH100 significantly expands low-precision compute. When leveraging sparse matrices, we’re looking at an incredible 4000 TOPS of INT4, 2000 TOPS of INT8, 1000 TFLOPs of BF16 and FP16, and a respectable 500 TFLOPs of TF32.

The non-matrix performance is less impressive, promising 60 TFLOPs of FP64, 60 TFLOPs of FP32, and 120 TFLOPs of FP16/BF16 compute. In comparison, AMD’s recently launched Instinct MI250X delivers 96 TFLOPs of FP32 and 47 TFLOPs of FP64 compute.

Going by the specs on paper, NVIDIA has invested heavily in integer matrix multiplication, offering roughly 5x (INT8) to 10x (INT4) the throughput of the MI250X in these workloads (2000 and 4000 TOPS vs 383 TOPS). AMD, on the other hand, has focused on traditional FP32 and FP64 performance.
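To make that comparison concrete, here is a quick back-of-the-envelope calculation using only the peak figures quoted above. The variable names are ours, and these are paper specs (sparse matrix math on the H100 side), not measured results.

```python
# Peak integer matrix throughput quoted in the article, in tera-ops per second.
H100_INT4 = 4000   # H100, sparse matrices
H100_INT8 = 2000   # H100, sparse matrices
MI250X_INT = 383   # MI250X figure used in the comparison


def speedup(ours: float, theirs: float) -> float:
    """Return how many times larger `ours` is than `theirs`."""
    return ours / theirs


int4_ratio = speedup(H100_INT4, MI250X_INT)
int8_ratio = speedup(H100_INT8, MI250X_INT)

print(f"INT4 vs MI250X: {int4_ratio:.1f}x")  # 10.4x
print(f"INT8 vs MI250X: {int8_ratio:.1f}x")  # 5.2x
```

The headline 10x only applies to the INT4 figure; at INT8 the gap on paper is closer to 5x.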

Internally, the INT32 core count per SM is unchanged at 64, but FP32 has been bumped up to 128 per SM, just like consumer Ampere and Ada. FP64 doubles as well: the 8,448 FP64 cores listed for the full GPU work out to 64 per SM across 132 SMs. There are four Tensor cores per SM for a total of 528 for the entire H100 GPU. For memory, we’re looking at a moderate 60MB of L2 cache and (up to) six 512-bit HBM2e memory stacks. The memory is said to be clocked at 1600MHz.
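The GPU-wide totals are simply the per-SM counts multiplied by the number of enabled SMs. The short sketch below reproduces them from the figures in this article (132 SMs on the H100); the dictionary and variable names are illustrative only.

```python
# Enabled SMs on the H100, per the spec table.
SM_COUNT = 132

# Per-SM unit counts quoted in the article.
per_sm = {"FP32": 128, "INT32": 64, "Tensor": 4}

# GPU-wide total = units per SM * number of SMs.
totals = {unit: count * SM_COUNT for unit, count in per_sm.items()}

for unit, total in totals.items():
    print(f"{unit}: {total}")
# FP32: 16896
# INT32: 8448
# Tensor: 528

# Working backwards from the table's FP64 total gives the per-SM count.
fp64_per_sm = 8448 // SM_COUNT
print(f"FP64 per SM: {fp64_per_sm}")  # 64
```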

| Data Center GPU | NVIDIA Tesla P100 | NVIDIA Tesla V100 | NVIDIA A100 | NVIDIA H100 |
|---|---|---|---|---|
| GPU Codename | GP100 | GV100 | GA100 | GH100 |
| GPU Architecture | NVIDIA Pascal | NVIDIA Volta | NVIDIA Ampere | NVIDIA Hopper |
| SMs | 56 | 80 | 108 | 132 |
| TPCs | 28 | 40 | 54 | 66 |
| FP32 Cores / SM | 64 | 64 | 64 | 128 |
| FP32 Cores / GPU | 3584 | 5120 | 6912 | 16896 |
| FP64 Cores / SM | 32 | 32 | 32 | 64 |
| FP64 Cores / GPU | 1792 | 2560 | 3456 | 8448 |
| INT32 Cores / SM | NA | 64 | 64 | 64 |
| INT32 Cores / GPU | NA | 5120 | 6912 | 8448 |
| Tensor Cores / SM | NA | 8 | 4 | 4 |
| Tensor Cores / GPU | NA | 640 | 432 | 528 |
| Texture Units | 224 | 320 | 432 | 528 |
| Memory Interface | 4096-bit HBM2 | 4096-bit HBM2 | 5120-bit HBM2 | 5 x 512-bit |
| Memory Size | 16 GB | 32 GB / 16 GB | 40 GB | 128 GB? |
| Memory Data Rate | 703 MHz DDR | 877.5 MHz DDR | 1215 MHz DDR | 1600 MHz DDR? |
| Memory Bandwidth | 720 GB/sec | 900 GB/sec | 1555 GB/sec | ? |
| L2 Cache Size | 4096 KB | 6144 KB | 40960 KB | 60 MB |
| TDP | 300 Watts | 300 Watts | 400 Watts | 700 Watts |
| TSMC Manufacturing Process | 16 nm FinFET+ | 12 nm FFN | 7 nm N7 | 4 nm N4 |
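The memory bandwidth row follows from the interface width and the data rate: bytes per transfer, times the clock, times two transfers per clock for DDR. The sketch below (the function name and layout are ours) reproduces the P100, V100 and A100 figures from the table. The H100 entry is left out because its memory specs are still unconfirmed; the same function could be applied once the final bus width and clock are known.

```python
def hbm_bandwidth_gbs(bus_width_bits: int, clock_mhz: float,
                      transfers_per_clock: int = 2) -> float:
    """Peak memory bandwidth in GB/s.

    transfers_per_clock defaults to 2 because the table lists DDR clocks.
    """
    bytes_per_transfer = bus_width_bits / 8
    transfers_per_second = clock_mhz * 1e6 * transfers_per_clock
    return bytes_per_transfer * transfers_per_second / 1e9


# (bus width in bits, memory clock in MHz) taken from the table above.
known_gpus = {
    "P100": (4096, 703.0),
    "V100": (4096, 877.5),
    "A100": (5120, 1215.0),
}

bandwidth = {name: hbm_bandwidth_gbs(width, clock)
             for name, (width, clock) in known_gpus.items()}

for name, gbs in bandwidth.items():
    print(f"{name}: {gbs:.1f} GB/s")
# P100: 719.9 GB/s
# V100: 898.6 GB/s
# A100: 1555.2 GB/s
```

These line up with the rounded 720, 900 and 1555 GB/sec entries in the table.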

The other highlight is support for PCIe Gen 5 alongside the NVLink interconnect, with NVLink enabling up to 900GB/s of GPU-to-GPU bandwidth. Overall, the H100 offers a substantial 4.9 TB/s of external bandwidth. Finally, this monster GPU has a TDP of 700W despite being built on TSMC’s N4 node, a 4nm-class refinement of the N5 (5nm) process.