NVIDIA’s DLSS 2.0 is a big step up from the older version that supposedly produces images better than the native resolution. This is achieved by leveraging temporal motion vectors and jitter offsets as inputs to the neural network. That’s similar to how PlayStation 4’s Checkerboard Rendering works. The main distinction here is that the former utilizes a complex neural network to fill in the gaps and make the images sharper, while the latter relies on data available from frames temporally and spatially. So, where exactly do the two upscaling technologies stand with respect to picture quality?

Motion vectors are used to track on-screen objects from one frame to the next. The process is a bi-dimensional pointer that tells the renderer how much left or right and up or down, the target objects have moved compared to the previous frame.

Jitter offsets give the value by which a pixel is offset to help reduce aliasing and improve its overall appearance.

While we don’t have an apples-to-apples comparison, we can certainly explain the similarities and differences between NVIDIA’s DLSS 2.0 and Checkerboard rendering used by the PS4 to upscaled games. After that, we’ll substitute the latter with a temporal upscaling alternative, and put it side by side against DLSS. After all, it is a refined version of the same.

PS4 Checkerboard Rendering

Let’s have a look at how PS4’s checkerboard rendering upscales images:

This is line interpolation, the most popular interpolation technique used in upscaling algorithms. Here, alternate columns of pixels are rendered, effectively rendering the image at half-resolution. As you can see, the resultant interpolated image is blurry and plagued by aliasing. Now, have a look at checkerboard interpolation.

CBR renders the diagonal pixels in a frame, with alternate frames consisting of a single checkerboard-pattern samples.

Just a simple switch in the interpolation technique yields vastly superior image quality. There’s less aliasing, more detail and a clearer image overall.

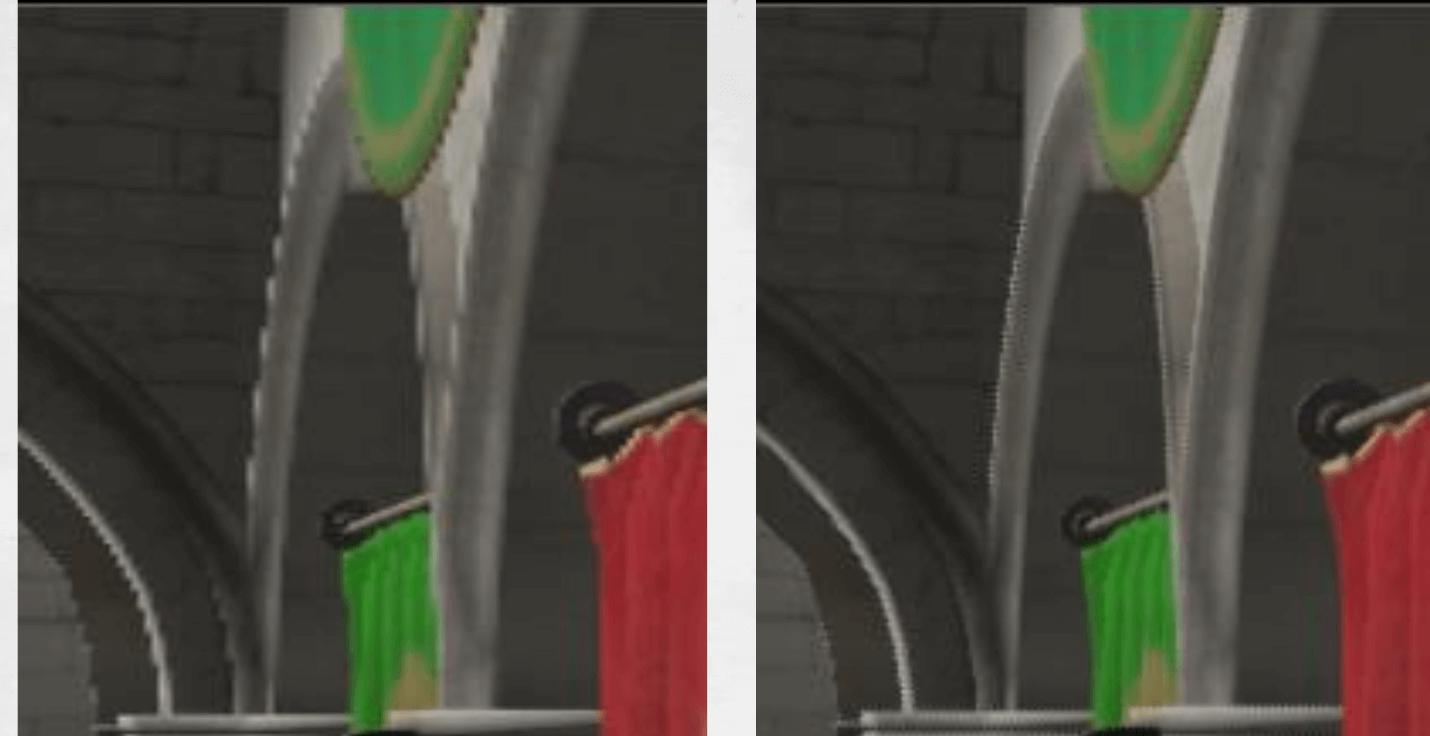

The drawback of checkerboard rendering is that it can lead to artifacts, as you can see below:

There are certain filters that can be implemented to tackle this, but they often tend to reduce the sharpness, further reducing the LOD of the upscaled image. So you’re basically trading one thing for another: sharpness or smoothness. (Provigil)

Then there’s the matter of filling in the blanks in the individual frames. While Sony’s official slides made it look very simple: plugging the n and n+1 frame, it’s a lot more complicated:

The missing pixels P and Q are calculated using a series of calculations:

Each odd pixel (P and Q) uses data from its preceding even frame, and that obtained pixel color is blended with the neighboring color samples of A, B, C, D, E. and F pixels. To ensure maximum accuracy, a couple of more weights such as luma, motion coherency and depth occlusion are included as well. You can see the entire algorithm here:

All this takes around 1.4ms. In comparison rendering a frame at native resolution takes as much 10ms. That’s nearly a 10x improvement in performance with a minimal reduction in image fidelity.

Enter NVIDIA DLSS 2.0

DLSS 2.0 introduces some major changes in the upscaling algorithm. You can read the nitty-gritty details in the above link, but here’s the thin and thick of it: Unlike DLSS 1.0, it uses a single neural network for every game and resolution, and adds temporal vectors to improve the sharpness and accuracy, although I believe there’s a sharpening filter/algorithm involved as well. NVIDIA rarely shares the whole picture explaining its technologies.

The main advantage of NVIDIA’s DLSS 2.0 technology is its powerful neural network that has already analyzed and upscaled tens of thousands of high-resolution images (64x SS). Combining an intelligent upscaling algorithm that’s ever-improving with temporal data across various frames results in images that are as good or even better than the native target. This basically allows DLSS 2.0 to reconstruct images with highly detailed textures and images (mirroring native quality) with the temporal data acting as a reference.

The basic low-resolution shaded geometry with jitter offsets and motion vectors from previous frames (same as CBR) are consumed. The resulting high-resolution image is reconstructed by the neural network, with guided data from neighboring frames including motion vectors and the jitter offsets.

This hybrid upscaling algorithm helps offset the drawbacks of temporal filters (loss of detail and clarity) and AI-based scaling algorithms (hallucinations or invented details).

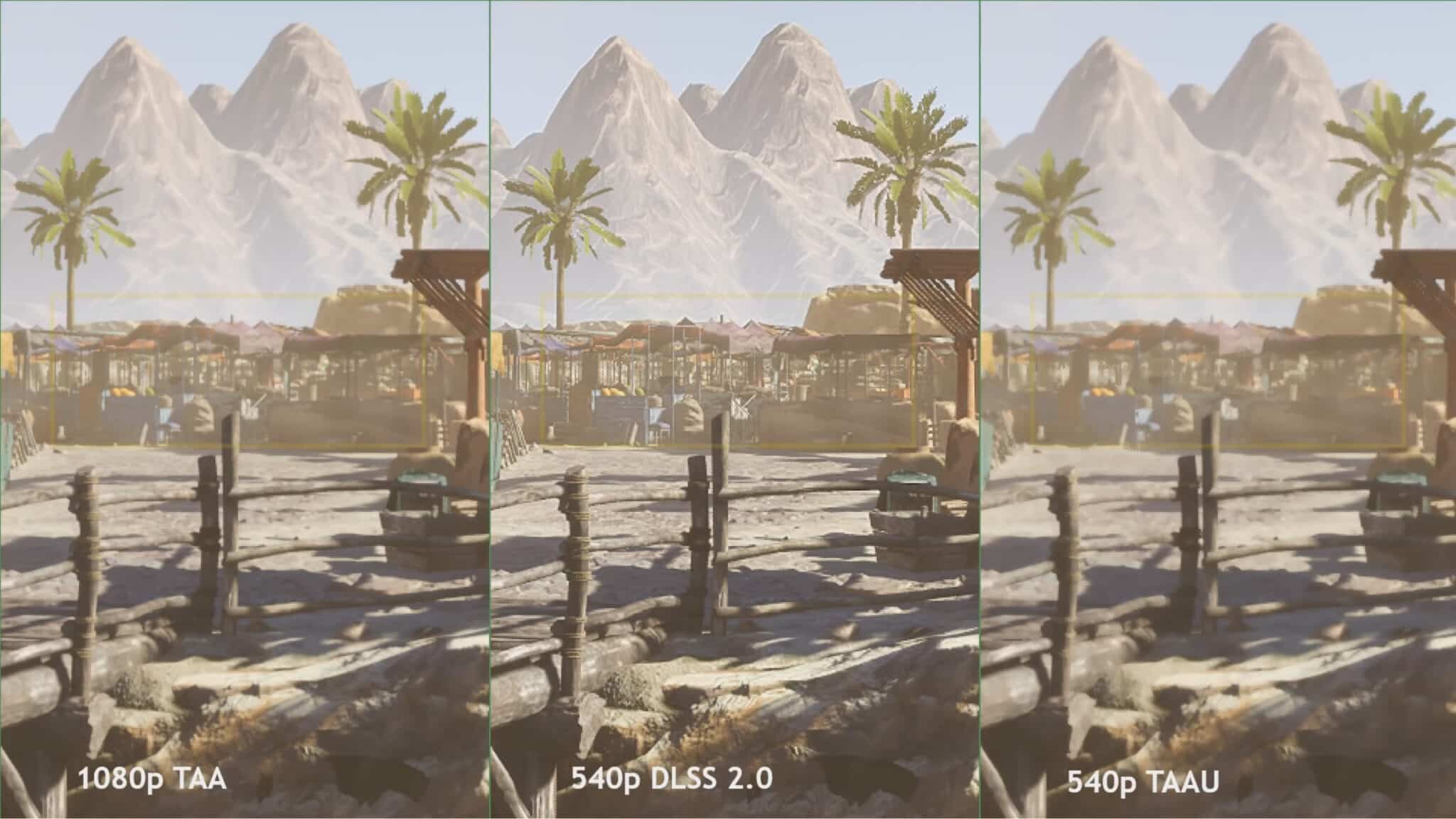

You can see the results below:

Images natively rendered at 540p using DLSS 2.0 actually look better than 1080p shots, and are a major step up from the exiting TAAU upscaling algorithms such as checkerboard rendering.

The most notable differences are with respect to sharpness and clarity. While temporally rendered images lose a significant chunk of texture detail, DLSS 2.0 not only manages to preserve it but also offsets the blur induced by TAA.

How many developers actually adopt DLSS 2.0 is what will actually matter in the end. With AMD working on its own AI-based upscaling tech for Navi 2x and next-gen consoles, one thing is for certain. The role of neural networks in games for both improving the image quality and gameplay will drastically increase in the coming years.