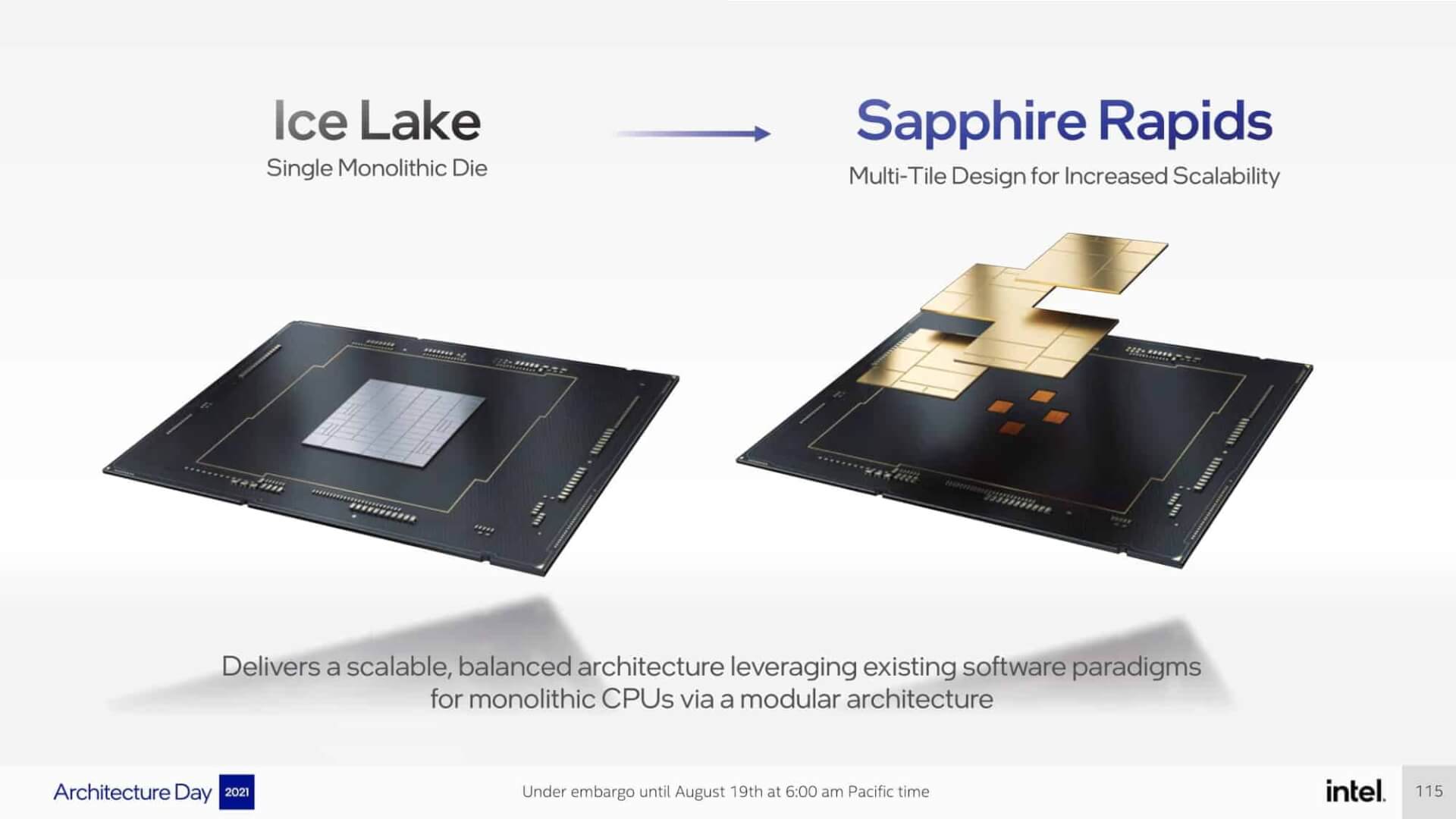

Intel is gearing up to launch its server and HEDT processors later this year based on the potent Golden Cove core architecture. The 4th Gen Xeon Scalable lineup, codenamed Sapphire Rapids-SP will be the first chiplet (MCM or tiled, call it what you want) based design featuring up to 56 cores across four 15 core tiles. Each die will have one core fused off to improve yields. Like Ice Lake-SP, AVX-512 and the newly added AMX instructions will be Intel’s key advantages against AMD’s Epyc Milan, Milan-X, and the soon to be launched Genoa lineups.

There’s been a major discovery though. Turns out Intel will be gating AVX-512 and AMX instructions behind a paywall of sorts, much like paid DLCs in video games. The feature known as Software Defined Silicon “SDSi” will be enabled primarily on server and data center nodes via the Linux kernel, allowing vendors to offer special “accelerators” or “add-ons” to clients for a price.

AVX-512 is primarily leveraged in certain data center workloads with densely packed instructions, allowing for a doubling in execution throughput compared to AVX2. On the downside, not many applications support AVX-512, and even if they do, the added performance comes at the cost of significantly increased power draw.

AMX, on the other hand, is similar to NVIDIA’s Tensor cores, accelerating matrix multiplication and other related 2D data types. These run in parallel with AVX-512 and other traditional x86 instructions without affecting the primary pipeline.

According to the source (YuuKi_Ans), in addition to AVX-512 and AMX, certain features of the HBM2e on-die memory will also be an add-on feature. We already know that the HBM memory will be usable in flat mode or cache mode, in addition to traditional. It’s likely that these features will be gated out of the box.

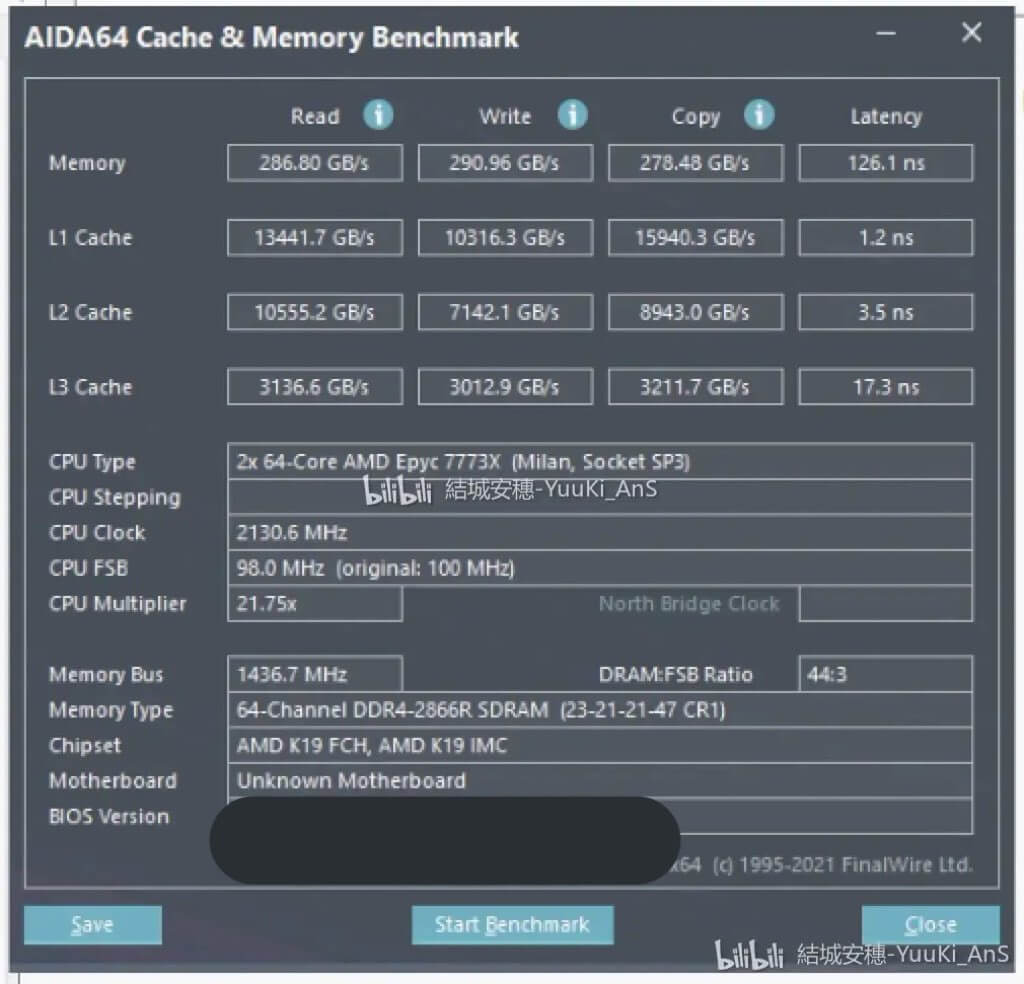

Looking at the memory latency of the sample shared by the source, it’s clear that Intel has massively beefed up the memory bandwidth, making it (nearly) twice as wide as AMD’s Epyc Milan-X and 50% faster than its Ice Lake predecessor. It’s unclear whether this is the result of the faster/larger cache or the inclusion of HBM2e memory. The same can be said for the cache, albeit the latency has taken a hit across the board.