DirectX 12 debuted in 2015 alongside Windows 10, promising significant performance and efficiency boosts across the board. These include better CPU utilization, closer-to-metal access, and a host of new features, most notably ray-tracing, or DXR (DirectX Raytracing). But what exactly is DirectX 12, and how is it different from DirectX 11? Let’s have a look.

What is DirectX: It’s an API

Like Vulkan and OpenGL, DirectX is a graphics API that video games use to render on your computer’s hardware. However, unlike its counterparts, DirectX is a proprietary Microsoft platform and only runs natively on Windows. OpenGL and Vulkan, on the other hand, also run on Linux and, with some caveats, macOS.

What does a graphics API like DirectX do? It acts as an intermediary between the game engine and the graphics driver, which in turn interacts with the OS kernel and the GPU. The engine uses the API to describe what should be drawn, and the driver translates those instructions into work the hardware can execute. Think of it as MS Paint, where the game is the painting and the paint application’s tools are the API. However, unlike a Paint image, a game built on a graphics API only runs where that API is available. In general, an API is designed for a specific OS, which is one reason why PS4 games don’t run on the Xbox One and vice versa.

DirectX 12 Ultimate breaks that rule: the same API is used on both Windows and the next-gen Xbox Series X. With DX12 Ultimate, Microsoft is essentially integrating the two platforms.

DirectX 11 vs DirectX 12: What Does It Mean for PC Gamers?

There are three main advantages of the DirectX 12 API for PC gamers:

Better Scaling with Multi-Core CPUs

One of the core advantages of low-level APIs like DirectX 12 and Vulkan is improved CPU utilization. Traditionally, most DirectX 9- and 11-based games used only two to four cores for tasks such as physics, AI, and draw calls, and some were limited to just one. With DirectX 12, that has changed: the load can be distributed more evenly across all cores, making multi-core CPUs more relevant for gamers.

Maximum Hardware Utilization

Many of you might have noticed that, early on, AMD GPUs performed relatively better in DirectX 12 titles than rival NVIDIA parts. Why is that?

The reason is better utilization. Traditionally, NVIDIA has had much better driver optimization, while AMD hardware has often suffered from the lack of it. DirectX 12 adds several technologies that improve utilization, such as asynchronous compute, which allows different kinds of work (read: compute and graphics) to be executed simultaneously. This makes weaker driver support a less pressing concern.
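To make the overlap concrete, here is a toy timing model in Python. It is not D3D12 code: the job names and millisecond costs are made up, and it assumes the graphics and compute work run on different, otherwise idle hardware units, which real GPUs only partly achieve.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    ms: float  # hypothetical GPU time in milliseconds


def serial_time(graphics, compute):
    # Single queue: every job waits for the previous one to finish.
    return sum(j.ms for j in graphics) + sum(j.ms for j in compute)


def overlapped_time(graphics, compute):
    # Idealized async compute: both queues run side by side on otherwise
    # idle units, so the frame ends when the longer queue finishes.
    return max(sum(j.ms for j in graphics), sum(j.ms for j in compute))


graphics = [Job("shadow maps", 2.0), Job("g-buffer", 4.0), Job("lighting", 3.0)]
compute = [Job("particles", 1.5), Job("ambient occlusion", 2.5)]

print(serial_time(graphics, compute))      # 13.0
print(overlapped_time(graphics, compute))  # 9.0
```

Under these assumptions the frame shrinks from 13 ms to 9 ms. In practice the two queues compete for some of the same resources, so real gains are smaller and vary by GPU and workload.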

Closer to Metal Support

Another major advantage of DirectX 12 is that developers have more control over how their game utilizes the hardware. Earlier, this was more abstract and was mostly taken care of by the drivers and the API (although some engines like Frostbite and Unreal provided low-level tools as well).

Now the task falls to the developers. They have closer to metal access, meaning that most of the rendering responsibilities and resource allocation are handled by the game engines with some help from the graphics drivers.

This is a double-edged sword, as there are multiple GPU architectures out in the wild, and for indie devs, it’s practically impossible to optimize their game for all of them. Luckily, third-party engines like Unreal, CryEngine, and Unity do much of this for them, so they can focus on design.

How DirectX 12 Improves Performance by Optimizing Hardware Utilization

Per-Call API Context

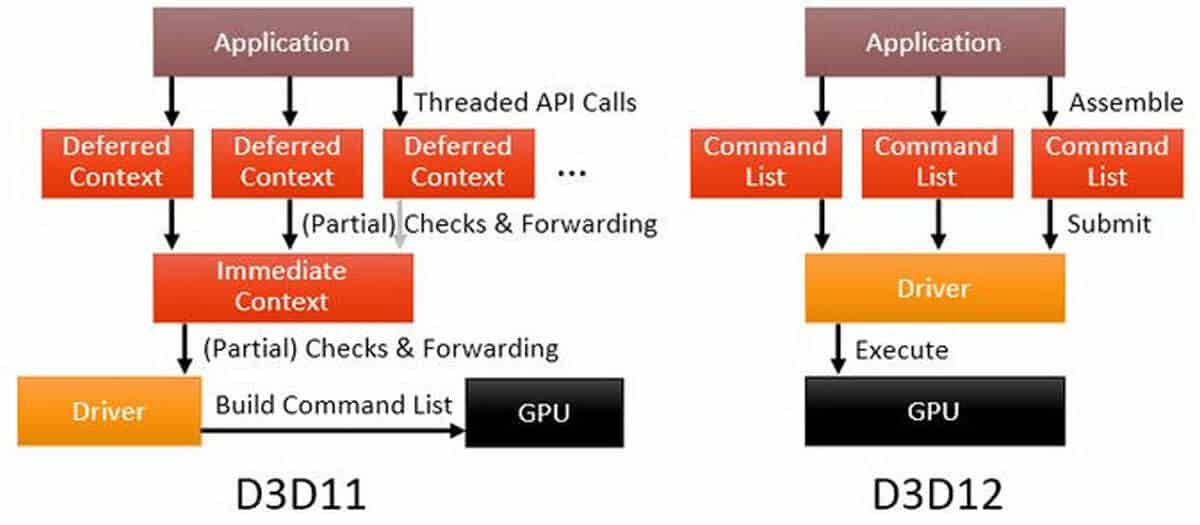

Like most applications, graphics APIs like DirectX have a main thread that keeps track of the internal API state (resources, their allocation, and availability). With DirectX 9 and 11, there’s a single global state (or context). The games you run on your PC modify this state through state calls and then issue draw calls, after which the work is submitted to the GPU for execution. Since there’s a single global state/context (and a single main thread on which it runs), multi-threading is difficult: if several threads change the state and issue draw calls at the same time, a draw can end up using another thread’s settings. Furthermore, modifying the global state via state calls is relatively slow, which adds further CPU overhead.

With DirectX 12, draw calls are more flexible. Instead of a single global state (context), each draw call from the application has its own smaller state (see PSOs below for more). Each call carries the data and pointers it needs and is independent of other calls and their states. This allows different draw calls to be recorded on multiple threads.
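The difference can be illustrated with a deliberately simplified Python model. The class and method names are hypothetical, not Direct3D calls, and the two threads are simulated by interleaving their calls by hand so the result is deterministic.

```python
from dataclasses import dataclass


class GlobalContext:
    """DX11-style model: one shared, mutable state read by every draw."""

    def __init__(self):
        self.texture = None
        self.draws = []

    def set_texture(self, texture):
        self.texture = texture

    def draw(self, mesh):
        # The draw uses whatever texture is current at this moment.
        self.draws.append((mesh, self.texture))


@dataclass(frozen=True)
class DrawCall:
    """DX12-style model: each draw carries its own immutable state."""
    mesh: str
    texture: str


# Shared context, with thread A and thread B interleaving their calls.
ctx = GlobalContext()
ctx.set_texture("rock.dds")   # thread A
ctx.set_texture("grass.dds")  # thread B overwrites A's state
ctx.draw("boulder")           # thread A: picks up the wrong texture
ctx.draw("lawn")              # thread B

# Same interleaving, but each thread records self-contained calls
# into its own list.
list_a, list_b = [], []
list_a.append(DrawCall("boulder", "rock.dds"))  # thread A
list_b.append(DrawCall("lawn", "grass.dds"))    # thread B

print(ctx.draws)  # [('boulder', 'grass.dds'), ('lawn', 'grass.dds')]
print(list_a + list_b)
```

With the shared context, thread A’s boulder is drawn with thread B’s texture; with self-contained draw calls, each thread records into its own list and the interleaving no longer matters.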

Pipeline State Objects

In DirectX 11, the GPU pipeline is configured through a wide range of separate states and shader stages, such as the vertex shader, hull shader, and geometry shader. These are often interdependent, and a later stage can’t progress until the previous one is defined. When the geometry from a scene is sent to the GPU for rendering, the resources and hardware required can vary depending on the rasterizer state, blend state, depth-stencil state, culling, etc.

Each of these objects in DirectX 11 has to be defined individually (at runtime), and the next state can’t be executed until the previous one has been finalized, as they require different hardware units (shaders vs. ROPs, TMUs, etc.). This leaves the hardware under-utilized, increasing overhead and reducing the number of draw calls per frame.

In the above comparison, HW state 1 represents the shader code, and state 2 combines the rasterizer and the control flow linking the rasterizer to the shaders. State 3 is the linkage between the blend and pixel shader. The vertex shader affects HW states 1 and 2, the rasterizer affects state 2, the pixel shader affects states 1 through 3, and so on. As explained above, this introduces some additional CPU overhead, as the driver generally prefers to wait until these dependencies are resolved.
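The dependency problem can be sketched as a driver that tracks "dirty" hardware states. The mapping follows the one described above; the entry for the blend state is an assumption standing in for the "and so on", and the driver class is hypothetical rather than how any real driver is written.

```python
# API-level state -> hardware (HW) states it feeds, following the text.
# The "blend" entry is an assumption standing in for "and so on".
AFFECTS = {
    "vertex_shader": {1, 2},
    "rasterizer": {2},
    "pixel_shader": {1, 2, 3},
    "blend": {3},
}


class Dx11StyleDriver:
    """Hypothetical driver that rebuilds dirty HW states before each draw."""

    def __init__(self):
        self.dirty = set()
        self.rebuilds = 0

    def set_state(self, api_state):
        # Changing one API object dirties every HW state that depends on it.
        self.dirty |= AFFECTS[api_state]

    def draw(self):
        # Dependencies must be resolved (HW states rebuilt) before drawing.
        self.rebuilds += len(self.dirty)
        self.dirty.clear()


driver = Dx11StyleDriver()
for _ in range(3):  # three draws that only swap the pixel shader
    driver.set_state("pixel_shader")
    driver.draw()
print(driver.rebuilds)  # 9
```

Swapping only the pixel shader still forces three hardware states to be rebuilt on every draw, which is the kind of repeated work PSOs are meant to move out of the draw loop.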

DirectX 12 replaces these separate states with Pipeline State Objects (PSOs), which are finalized at creation. A PSO, in simple words, is an object that describes the full pipeline state for the draw calls that use it. An application can create as many PSOs as required and switch between them as needed. Each PSO includes the bytecode for all shaders (vertex, pixel, domain, hull, and geometry), so the driver can translate it into hardware state up front, without depending on any other object or state.
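A rough Python analogue of the PSO model follows. In D3D12 the real entry point is `ID3D12Device::CreateGraphicsPipelineState`; the code below does not call it, and `PipelineDesc` and `create_pso` are hypothetical stand-ins. The point is that the whole pipeline is described and "compiled" once, up front, and draws afterward only pick among existing objects.

```python
from dataclasses import dataclass
from functools import lru_cache


@dataclass(frozen=True)
class PipelineDesc:
    """Hypothetical full pipeline description; every field is set up front."""
    vertex_shader: str
    pixel_shader: str
    rasterizer: str = "cull_back"
    blend: str = "opaque"
    depth_stencil: str = "depth_less"


compile_count = 0


@lru_cache(maxsize=None)
def create_pso(desc: PipelineDesc) -> tuple:
    # Stand-in for the expensive step: the whole pipeline is validated and
    # translated to hardware state once, when the PSO is created.
    global compile_count
    compile_count += 1
    return ("PSO", desc)


opaque = create_pso(PipelineDesc("mesh_vs", "lit_ps"))
glass = create_pso(PipelineDesc("mesh_vs", "glass_ps", blend="alpha"))

# At draw time, switching pipelines just means referencing an existing PSO.
frame = [opaque, glass, opaque, glass]

# Asking for an identical description again hits the cache: no recompile.
print(create_pso(PipelineDesc("mesh_vs", "lit_ps")) is opaque)  # True
print(compile_count)  # 2
```

Caching by the full description is one common way engines avoid creating duplicate PSOs; it is a design choice in this sketch, not something the API does for you.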

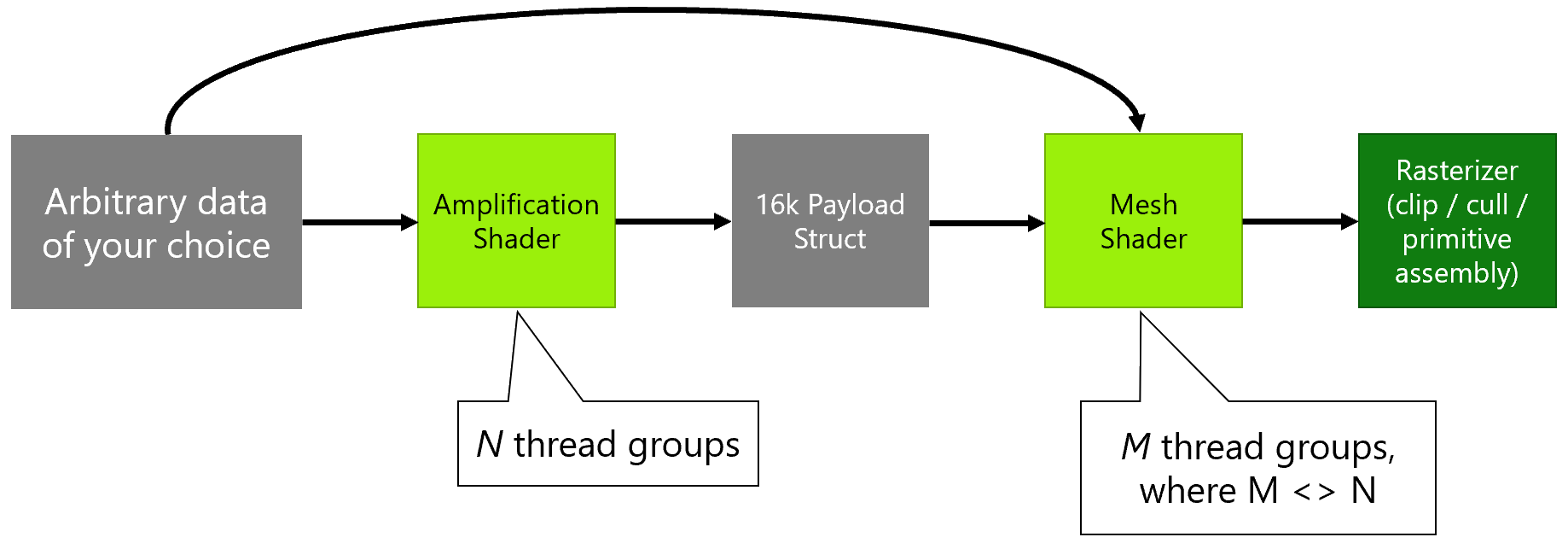

NVIDIA’s and AMD’s latest GPUs, with the help of DirectX 12, introduce Task Shaders and Mesh Shaders. These two new shaders replace the various cumbersome shader stages involved in the DX11 pipeline with a more flexible approach.

The mesh shader takes over the work of earlier geometry stages such as the domain and geometry shaders, but internally it uses a cooperative, multi-threaded model (a thread group processes a small batch of geometry together) instead of a single-threaded one. The task shader works similarly. The major difference is that while the hull shader took patches as input and produced the data for tessellation, the task shader’s inputs and outputs are user-defined.

In the scene below, there are thousands of objects that need to be rendered. In the traditional model, each of them would require a separate draw call from the CPU. With the task shader, a list of objects is instead sent with a single draw call. The task shader then processes this list in parallel and assigns work to the mesh shader (which also works in parallel), after which the results are sent to the rasterizer for 3D-to-2D conversion.

This approach significantly reduces the number of CPU draw calls per scene, freeing up headroom for higher levels of detail.

Mesh shaders also facilitate the culling of unused triangles. This is done using the amplification shader (DirectX’s name for the task shader), which runs before the mesh shader and determines how many mesh shader thread groups are needed. It tests the various meshlets (small clusters of triangles) for intersection with the view frustum and for screen visibility, and culls those that won’t be seen. Culling geometry at this early rendering stage significantly improves performance.
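The culling step can be sketched on the CPU in Python. This is an illustration, not an amplification shader: on the GPU this logic runs in parallel in HLSL, and the survivor count would be passed to `DispatchMesh`. The `Meshlet` type is hypothetical, and the visibility test uses an axis-aligned box as a crude stand-in for a real view-frustum test.

```python
from dataclasses import dataclass


@dataclass
class Meshlet:
    center: tuple  # (x, y, z) of a bounding sphere in view space
    radius: float


def roughly_visible(m, near=0.1, far=100.0, half_extent=10.0):
    """Crude stand-in for a frustum test: an axis-aligned view box."""
    x, y, z = m.center
    r = m.radius
    return (near - r <= z <= far + r
            and abs(x) <= half_extent + r
            and abs(y) <= half_extent + r)


def amplification_stage(meshlets):
    # The whole list arrives with one draw; this stage culls it and decides
    # how many mesh shader groups to launch (one per surviving meshlet here).
    survivors = [i for i, m in enumerate(meshlets) if roughly_visible(m)]
    return len(survivors), survivors


meshlets = [
    Meshlet((0.0, 0.0, 5.0), 1.0),    # in front of the camera
    Meshlet((50.0, 0.0, 5.0), 1.0),   # far off to the side
    Meshlet((0.0, 0.0, -20.0), 1.0),  # behind the camera
    Meshlet((3.0, -2.0, 60.0), 2.0),  # in view, but distant
]
group_count, visible_ids = amplification_stage(meshlets)
print(group_count, visible_ids)  # 2 [0, 3]
```

Only the two visible meshlets get mesh shader groups, so the hidden geometry never reaches the rasterizer.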

NVIDIA’s Mesh and Hull Shaders also leverage DX12

Command Queue

With DirectX 11, there’s only a single queue going to the GPU. Work from all CPU cores is funneled into this one stream, which leads to uneven load distribution and limits how well multi-core CPUs can be used.


This is somewhat alleviated by using deferred contexts, but even then, there’s ultimately only one stream of commands going to the GPU at the final stage. DirectX 12 introduces a new model built around command lists that can be recorded independently, improving multi-threading. The workload can be divided into smaller command lists that require different resources, allowing them to execute simultaneously. This is how asynchronous compute works: the compute and graphics commands are placed in separate queues and executed concurrently.
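The command-list model can be sketched with Python threads. The names are hypothetical; in D3D12 the corresponding objects are `ID3D12GraphicsCommandList` and `ID3D12CommandQueue` (submitted via `ExecuteCommandLists`), which this code does not use. Python’s GIL means the threads here show the structure, not a real speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def record_command_list(worker_id, draws):
    # Each worker records into its own list; nothing is shared while recording.
    return [f"draw {d} (worker {worker_id})" for d in draws]


class Queue:
    """Hypothetical stand-in for a GPU command queue."""

    def __init__(self, name):
        self.name = name
        self.executed = []

    def execute(self, command_lists):
        # Lists run in the order they are submitted, regardless of which
        # thread finished recording first.
        for command_list in command_lists:
            self.executed.extend(command_list)


draws = list(range(8))
chunks = [draws[i::4] for i in range(4)]  # split the scene across 4 workers

with ThreadPoolExecutor(max_workers=4) as pool:
    # map() returns results in input order, not completion order.
    command_lists = list(pool.map(record_command_list, range(4), chunks))

graphics_queue = Queue("graphics")
compute_queue = Queue("compute")
graphics_queue.execute(command_lists)
compute_queue.execute([["dispatch particles"], ["dispatch ambient occlusion"]])
print(len(graphics_queue.executed), len(compute_queue.executed))  # 8 2
```

Each worker records without touching shared state, and the main thread decides the submission order, so the result is the same however the threads are scheduled.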

Resource Binding

In DirectX 11, resource binding was highly abstract and convenient but not the best in terms of hardware utilization. It left many of the hardware components unused or idle. Most game engines would use “view objects” to allocate resources and bind them to various shader stages of the GPU pipeline.

The objects would be bound to slots along the pipeline at draw time, and the shaders would read the required data from these slots. The drawback of this model is that when the game engine needs a different set of resources, the existing bindings are useless and everything must be rebound.

DirectX 12 replaces the resource views with descriptor heaps and tables. A descriptor is a small object that contains information about one resource. These are grouped together to form descriptor tables that are stored in a heap.

Ideally, a descriptor table stores information about one type of resource, while a heap contains all the tables required to render one or more frames. The GPU pipeline accesses this data by referencing the descriptor table index.

As the descriptor heap already contains the required descriptor data, in case a different set of resources is needed, the descriptor table is switched, which is much more efficient than rebinding the resources from scratch.
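A minimal Python model of descriptor heaps and tables, assuming a single heap and tables described by a start offset and a count. The types are hypothetical; in D3D12 a table is bound with calls such as `SetGraphicsRootDescriptorTable`, which takes a GPU descriptor handle rather than a Python object.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Descriptor:
    resource: str  # which resource this descriptor describes
    kind: str      # e.g. "SRV" for a texture view


# One heap, filled once, holding descriptors for every material.
heap = [
    Descriptor("brick_albedo", "SRV"), Descriptor("brick_normal", "SRV"),
    Descriptor("metal_albedo", "SRV"), Descriptor("metal_normal", "SRV"),
]


@dataclass(frozen=True)
class DescriptorTable:
    start: int  # offset of the first descriptor in the heap
    count: int  # number of consecutive descriptors

    def resolve(self, heap):
        return heap[self.start:self.start + self.count]


brick = DescriptorTable(start=0, count=2)
metal = DescriptorTable(start=2, count=2)

# Switching materials only changes which table (heap range) is referenced;
# the descriptors already in the heap are reused, not rebuilt.
for table in (brick, metal):
    print([d.resource for d in table.resolve(heap)])
```

Switching from the brick material to the metal one changes only which slice of the heap the table points at.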

Other features that come with DirectX 12 are:

DirectX Raytracing (DXR): This is essentially the API support for real-time ray-tracing that NVIDIA so lovingly calls RTX.

Variable Rate Shading: Variable Rate Shading lets the GPU concentrate its shading work on the areas of the screen that matter most in each frame. In a shooter, this would be the space around the crosshair. In contrast, the region near the screen’s border is mostly out of focus and can be shaded at a lower rate (to some degree).

It allows the developers to focus more on the areas that actually affect the apparent visual quality (the center of the frame in most cases) while reducing the shading in the peripheries.

Two common ways of choosing the shading rate are Content Adaptive Shading and Motion Adaptive Shading:

CAS sets the shading rate individually for each 16×16-pixel screen tile, allowing the GPU to increase the shading rate in regions that stand out (high contrast or detail) while reducing it in the rest.

Motion Adaptive Shading adjusts the shading rate based on on-screen motion: regions that move quickly across the screen, where motion blur hides fine detail, are shaded at a lower rate, while content that stays relatively still on screen keeps full rate. In a racing game, the car, which stays roughly fixed in front of the camera, keeps full shading, while the road and off-road regions rushing past are given reduced priority.
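Content adaptive shading can be sketched as building a per-tile shading-rate image. In this Python sketch, the contrast thresholds and the 1x1/2x2/4x4 rate choices are assumptions and the luminance image is synthetic; real implementations work from the previous frame’s output and use the tile size the hardware reports.

```python
def tile_contrast(luma, x0, y0, tile):
    # Max-minus-min luminance inside one tile (clipped at the image edge).
    h, w = len(luma), len(luma[0])
    values = [luma[y][x]
              for y in range(y0, min(y0 + tile, h))
              for x in range(x0, min(x0 + tile, w))]
    return max(values) - min(values)


def rate_for(contrast, high=0.2, low=0.05):
    # Returns the edge of the pixel block that shares one shading result.
    if contrast >= high:
        return 1  # 1x1: shade every pixel
    if contrast >= low:
        return 2  # 2x2: one result per 2x2 block
    return 4      # 4x4: one result per 4x4 block


def build_rate_image(luma, tile=16):
    h, w = len(luma), len(luma[0])
    return [[rate_for(tile_contrast(luma, tx, ty, tile))
             for tx in range(0, w, tile)]
            for ty in range(0, h, tile)]


# Synthetic 32x32 frame: flat grey on the left, a fine checkerboard on the right.
luma = [[0.5 if x < 16 else float((x + y) % 2) for x in range(32)]
        for y in range(32)]
print(build_rate_image(luma))  # [[4, 1], [4, 1]]
```

The flat left half of the frame is shaded once per 4x4 block, while the high-contrast right half keeps full rate.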

Multi-GPU Support: DirectX 12 supports two types of multi-GPU rendering, namely implicit and explicit. Implicit is essentially SLI/CrossFire and leaves the job to the vendor’s driver. Explicit is more interesting and lets the game engine control how the GPUs work in parallel. This allows for better scaling and for mixing and matching different GPUs, even ones from different vendors (including your dGPU and iGPU).

Another major advantage is that the VRAM contents of the two GPUs don’t have to be mirrored, so each card can hold different data and, in principle, the combined video memory can be used rather than duplicated. This and many other features make DirectX 12 a major upgrade to the software side of PC gaming.
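One way an engine could use explicit multi-GPU is split-frame rendering, dividing each frame between GPUs according to their speed. The Python function below is a hypothetical helper that only computes the split; the relative throughput figures are assumptions, and alternate-frame rendering is another common strategy.

```python
def split_rows(height, throughputs):
    """Hypothetical split-frame helper: rows per GPU, proportional to speed."""
    total = sum(throughputs)
    bounds, start = [], 0
    for i, t in enumerate(throughputs):
        if i == len(throughputs) - 1:
            end = height  # the last GPU takes the remainder: no gaps or overlaps
        else:
            end = start + round(height * t / total)
        bounds.append((start, end))
        start = end
    return bounds


# A discrete GPU assumed to be about 3x faster than the integrated one.
print(split_rows(1080, [3.0, 1.0]))  # [(0, 810), (810, 1080)]
```

Giving the last GPU the remainder guarantees every row is rendered exactly once, even when the proportional shares don’t divide the frame evenly.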

DirectX 12 Ultimate: How is it Different from DirectX 12?

DirectX 12 Ultimate is an incremental upgrade over the existing DirectX 12 (tier 1.1). Its core advantage is cross-platform support: Both the next-gen Xbox Series X and the latest PC games will leverage it. This not only simplifies cross-platform porting but also makes it easier for developers to optimize their games for the latest hardware.

By the time the Xbox Series X arrived last year, game developers had already had plenty of time with PC hardware supporting the same feature set (NVIDIA’s Turing), simplifying the porting and optimization process. At the same time, this also allows for better utilization of the latest PC hardware, thereby improving performance. All in all, it’s another step by Microsoft to unify the Xbox and PC gaming platforms.

It also introduces DirectX Raytracing 1.1, Sampler Feedback, Mesh Shaders, and Variable Rate Shading. The last two were already supported by NVIDIA’s RTX Turing GPUs (and are explained above), but they should now see much wider adoption by developers and in newer games.

Texture Sampler Feedback: TSF is something Microsoft is really stressing. Simply put, it keeps track of which textures (MIP maps) are actually sampled in the game and which are not. Consequently, the unused ones can be evicted from memory, which Microsoft says can improve effective VRAM usage by up to 2.5x. In the above image, you can see that on the right (without TSF), the entire texture resources for the globe are loaded into memory. With TSF, on the left, only the part that’s actually visible on the screen is kept, while the unused bits are removed, saving valuable memory.
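The residency decision behind sampler feedback can be sketched in Python. Here `requested` stands in for a sampler feedback map, recording the finest mip level actually sampled in each tile; each tile is modeled as a hypothetical 256×256-texel block with its own mip chain, the data is made up, and the code models only the bookkeeping, not the D3D12 feedback-map API.

```python
TILE_SIZE = 256  # hypothetical tile edge length in texels (mip 0)
MIP_COUNT = 9    # 256, 128, ..., 1


def chain_texels(finest_mip):
    # Texels held for one tile when mips finest_mip..MIP_COUNT-1 are resident.
    return sum((TILE_SIZE >> k) ** 2 for k in range(finest_mip, MIP_COUNT))


def apply_feedback(resident, requested):
    """Keep only tiles that were sampled, at the finest mip actually requested.

    `requested` stands in for a sampler feedback map: tile -> finest mip the
    shaders sampled this frame; tiles that were never sampled are absent.
    """
    return {tile: requested[tile] for tile in resident if tile in requested}


resident = {"front": 0, "side": 0, "back": 0}  # everything at full detail
requested = {"front": 0, "side": 2}            # back of the globe never sampled

before = sum(chain_texels(m) for m in resident.values())
after = sum(chain_texels(m) for m in apply_feedback(resident, requested).values())
print(before, after)  # 262143 92842
```

Dropping the unsampled tile and trimming the side tile to mip 2 cuts the resident texels from 262,143 to 92,842 in this made-up example.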

This can be done across frames as well (temporally). In a relatively static image, objects in the distance can reuse shading over multiple frames, for example, every two to four frames or even longer. The GPU time saved can be used to increase the quality of nearby objects or other areas that have a more apparent impact on image quality.
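Temporal reuse can be sketched as a per-object cache that only reshades when enough frames have passed. In this Python sketch, the distance bands and intervals are hypothetical, chosen to mirror the "every two to four frames" example.

```python
def reuse_interval(distance, near=10.0, far=50.0):
    """Frames between reshades; the distance bands are hypothetical."""
    if distance < near:
        return 1  # nearby: reshade every frame
    if distance < far:
        return 2  # mid-range: every other frame
    return 4      # distant: every fourth frame


class ShadingCache:
    def __init__(self):
        self.last_shaded = {}  # object -> frame it was last shaded
        self.shade_count = 0

    def shade(self, obj, distance, frame):
        last = self.last_shaded.get(obj)
        if last is None or frame - last >= reuse_interval(distance):
            self.shade_count += 1  # expensive path: compute fresh shading
            self.last_shaded[obj] = frame
        # otherwise: reuse the result stored on an earlier frame


cache = ShadingCache()
for frame in range(8):
    cache.shade("player_car", 5.0, frame)
    cache.shade("mountain", 200.0, frame)
print(cache.shade_count)  # 10
```

Over eight frames the nearby car is shaded eight times and the distant mountain twice, leaving GPU time for the objects the player actually looks at.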

DXR 1.1 is a minor upgrade over the existing 1.0 version:

- Raytracing can now be driven by the GPU: DispatchRays calls can be issued through ExecuteIndirect instead of one by one from the CPU, reducing CPU overhead and improving performance.

- New raytracing shaders can be loaded as and when needed, depending upon the player’s location in the game world.

- Inline raytracing is one of the core additions to DirectX 12 Ultimate. It gives developers more control over the raytracing process. It can be used from any stage of the rendering pipeline and is best suited to cases where shading complexity is minimal.
